Data Pipeline Architecture is the systematic design and management of how data flows from its sources to its destinations, turning raw data into usable insights along the way. Organizations need robust pipelines to handle diverse data sources efficiently. A well-designed architecture automates data movement and transformation, which reduces manual errors and helps preserve data integrity. Understanding how pipelines are built helps teams make their data processes efficient, reliable, and maintainable.

Understanding Data Pipeline Architecture

Definition and Importance

What is Data Pipeline Architecture?

Data Pipeline Architecture is the design of the systems that move data from sources to destinations. It covers the processes, tools, and infrastructure that automate that flow. Its goal is data that is consistent, reliable, and ready for analysis, whatever the mix of sources an organization has to handle.

Why is it Important?

Data Pipeline Architecture holds critical importance in modern data-driven environments. It enables organizations to transform raw data into actionable insights. Efficient data pipelines reduce errors and ensure data integrity. A well-designed architecture enhances data processing speed and accuracy. This leads to better decision-making and operational efficiency.

Key Components

Data Sources

Data sources form the foundation of any Data Pipeline Architecture. These sources include databases, APIs, file systems, and streaming data. Each source provides raw data that enters the pipeline. Identifying and integrating these sources is crucial for a robust architecture.

Data Ingestion

Data ingestion involves collecting and importing data from various sources into the pipeline. This process can occur in real-time or batch mode. Tools like Apache Kafka and AWS Kinesis facilitate efficient data ingestion. Proper ingestion ensures that data flows smoothly into subsequent stages.
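The difference between real-time and batch ingestion can be sketched in plain Python. The example below is a minimal illustration, not how Kafka or Kinesis work internally: `micro_batches` is a hypothetical helper that groups a simulated event stream into fixed-size batches, which sits between the two modes.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def micro_batches(events: Iterable[dict], batch_size: int) -> Iterator[List[dict]]:
    """Group an incoming event stream into fixed-size batches.

    Hypothetical helper for illustration; real ingestion tools handle
    batching, offsets, and delivery guarantees themselves.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    iterator = iter(events)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


# Simulated source: five events arriving one at a time.
events = ({"id": i} for i in range(5))
batches = list(micro_batches(events, batch_size=2))
```

A batch size of 1 behaves like event-at-a-time ingestion, while a large batch size approaches classic batch loading.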

Data Processing

Data processing transforms raw data into a usable format. This stage includes cleaning, filtering, aggregating, and enriching data. Technologies like Apache Spark and Flink are commonly used for data processing. Effective processing ensures data quality and prepares it for analysis.
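As a rough sketch of the cleaning, filtering, and aggregating steps, the example below uses only the Python standard library. The field names (`region`, `amount`) and the rule that negative amounts are dropped are assumptions made for illustration; at scale, an engine such as Spark or Flink would do this work.

```python
from collections import defaultdict

raw_rows = [
    {"region": " north ", "amount": "10.5"},
    {"region": "South", "amount": "n/a"},   # unparseable amount
    {"region": "north", "amount": "4.5"},
    {"region": "south", "amount": "-3"},    # negative amounts are filtered
]


def clean(row):
    """Normalize text fields and parse the amount; return None if invalid."""
    try:
        amount = float(row["amount"])
    except ValueError:
        return None
    return {"region": row["region"].strip().lower(), "amount": amount}


def process(rows):
    """Clean, filter, and aggregate rows into totals per region."""
    totals = defaultdict(float)
    for row in rows:
        cleaned = clean(row)
        if cleaned is None or cleaned["amount"] < 0:
            continue
        totals[cleaned["region"]] += cleaned["amount"]
    return dict(totals)


totals = process(raw_rows)
```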

Data Storage

Data storage involves saving processed data in a structured manner. This can include databases, data warehouses, and data lakes. Solutions like Amazon S3, Google BigQuery, and Snowflake provide scalable storage options. Proper storage ensures data availability and accessibility for analysis.

Data Visualization

Data visualization represents the final stage in Data Pipeline Architecture. This stage involves presenting data in graphical formats like charts and dashboards. Tools like Tableau, Power BI, and Looker facilitate data visualization. Effective visualization helps stakeholders understand and interpret data insights.

Building Blocks of Data Pipelines

Core Components

Extract, Transform, Load (ETL)

Extract, Transform, Load (ETL) forms the backbone of many data pipelines. The ETL process begins with data extraction from various sources such as databases, APIs, and file systems. Extraction involves gathering raw data in its original format. The next step, transformation, converts this raw data into a usable format by cleaning, filtering, and aggregating it. Transformation ensures data quality and consistency. Finally, the load stage involves storing the transformed data in a target system like a data warehouse or data lake. ETL tools like Apache NiFi and Talend facilitate these processes, ensuring efficient data movement and transformation.
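The three ETL stages can be sketched end to end with the Python standard library. This is a minimal illustration under stated assumptions: an in-memory CSV string stands in for the source system, an in-memory SQLite database stands in for the warehouse, and the `users` table and its columns are hypothetical.

```python
import csv
import io
import sqlite3

# Assumption: a small in-memory CSV stands in for the source system.
SOURCE_CSV = "user_id,email\n1,Ada@Example.com\n2,\n3,grace@example.com\n"


def extract(text):
    """Read rows from the CSV source in their original form."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Drop rows without an email and normalize the rest."""
    return [
        (int(row["user_id"]), row["email"].strip().lower())
        for row in rows
        if row["email"].strip()
    ]


def load(records, conn):
    """Write transformed records to the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users (user_id INTEGER PRIMARY KEY, email TEXT)"
    )
    conn.executemany("INSERT INTO users VALUES (?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")  # stands in for a data warehouse
load(transform(extract(SOURCE_CSV)), conn)
loaded = conn.execute("SELECT user_id, email FROM users ORDER BY user_id").fetchall()
```

Keeping each stage a separate function makes it easier to test the stages in isolation and to swap the source or target later.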

Data Orchestration

Data Orchestration manages the sequence and execution of data processing tasks within a pipeline. Orchestration tools like Apache Airflow and AWS Step Functions let users define workflows as explicit task graphs: Airflow models them as directed acyclic graphs (DAGs), while Step Functions models them as state machines. Because workflows are defined in code or configuration, pipelines can be generated and modified programmatically. These tools also support parallel task execution, making them suitable for complex data processing scenarios. Effective orchestration ensures that each stage runs only after the stages it depends on have succeeded, which helps maintain data integrity and consistency.
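To make the DAG idea concrete, the sketch below uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9+) to work out which tasks can run and in what order. It is not Airflow's API, and the task names are hypothetical; it only shows the dependency resolution an orchestrator performs.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical task names; each task maps to the set of tasks it depends on.
dag = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join": {"extract_orders", "extract_customers"},
    "validate": {"join"},
    "load": {"validate"},
}

sorter = TopologicalSorter(dag)
sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle

waves = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())  # tasks whose dependencies are done
    waves.append(ready)                 # a real scheduler would run these in parallel
    sorter.done(*ready)
```

Each entry in `waves` is a group of tasks with no unmet dependencies, so the two extract tasks land in the same group and could run concurrently.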

Data Quality and Validation

Data Quality and Validation are critical for ensuring the reliability of data pipelines. This component involves checking data for accuracy, completeness, and consistency. Tools like Great Expectations and Deequ provide automated data validation and quality checks. These tools help identify and rectify data anomalies early in the pipeline. High data quality ensures that downstream processes receive accurate and reliable data, leading to better analytical outcomes.
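The checks described above can be approximated in a few lines of plain Python. This sketch does not use the Great Expectations or Deequ APIs; `validate` is a hypothetical function, and the `order_id` and `amount` fields and the non-negative rule are assumptions chosen for illustration.

```python
def validate(rows, required, unique_key):
    """Return a list of human-readable problems found in the rows."""
    problems = []
    seen = set()
    for index, row in enumerate(rows):
        # Completeness: every required field must be present and non-empty.
        for field in required:
            if row.get(field) in (None, ""):
                problems.append(f"row {index}: missing {field}")
        # Consistency: the key must not repeat.
        key = row.get(unique_key)
        if key in seen:
            problems.append(f"row {index}: duplicate {unique_key}={key}")
        seen.add(key)
        # Accuracy: a simple range check on the (assumed) amount field.
        amount = row.get("amount")
        if isinstance(amount, (int, float)) and amount < 0:
            problems.append(f"row {index}: negative amount")
    return problems


rows = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 1, "amount": -5.0},
    {"order_id": 2, "amount": None},
]
problems = validate(rows, required=["order_id", "amount"], unique_key="order_id")
```

Running checks like these right after ingestion lets a pipeline stop or quarantine bad records before they reach downstream consumers.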

Supporting Technologies

Databases and Data Warehouses

Databases and Data Warehouses serve as the primary storage solutions in Data Pipeline Architecture. Databases like MySQL and PostgreSQL store structured data for transactional purposes. Data warehouses like Amazon Redshift and Google BigQuery store large volumes of processed data for analytical purposes. These storage solutions offer scalability and high performance, ensuring data availability and accessibility for analysis.

Stream Processing Frameworks

Stream Processing Frameworks handle real-time data processing within a pipeline. Frameworks like Apache Flink and Apache Kafka (through its Kafka Streams library) process data streams as they arrive, enabling real-time analytics and decision-making. These frameworks support high-throughput, low-latency processing, making them well suited to time-sensitive applications. Stream processing keeps data current and actionable as it moves through the pipeline.
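One common stream processing operation is windowed aggregation. The sketch below counts events in fixed, non-overlapping (tumbling) windows using plain Python. It is a simplification under stated assumptions: events arrive in timestamp order, and `tumbling_window_counts` is a hypothetical function rather than part of any framework.

```python
from collections import Counter


def tumbling_window_counts(events, window_seconds):
    """Count events per key in fixed, non-overlapping time windows.

    Assumes events arrive ordered by timestamp; real frameworks also
    handle late and out-of-order data, which this sketch ignores.
    """
    current_window = None
    counts = Counter()
    for timestamp, key in events:
        window_start = timestamp - (timestamp % window_seconds)
        if current_window is not None and window_start != current_window:
            yield current_window, dict(counts)  # emit the closed window
            counts = Counter()
        current_window = window_start
        counts[key] += 1
    if current_window is not None:
        yield current_window, dict(counts)  # flush the last open window


# (timestamp in seconds, event key)
events = [(0, "click"), (3, "view"), (9, "click"), (10, "click"), (25, "view")]
windows = list(tumbling_window_counts(events, window_seconds=10))
```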

Batch Processing Tools

Batch Processing Tools manage the processing of large data sets in batches. Tools like Apache Hadoop and AWS Glue perform batch processing tasks, transforming and loading data at scheduled intervals. Batch processing is suitable for scenarios where real-time processing is not required. These tools provide robust and scalable solutions for handling large volumes of data efficiently. Batch processing ensures that data pipelines can handle periodic data loads without compromising performance.

Diagrams and Visual Representations

Importance of Diagrams

Simplifying Complex Architectures

Diagrams simplify complex data pipeline architectures. Visual representations break down intricate systems into understandable components. This approach helps data engineers and stakeholders grasp the architecture's structure. Diagrams highlight the flow of data and the relationships between different components. Simplified visuals reduce the cognitive load required to understand complex systems.

Enhancing Communication

Effective communication plays a crucial role in data pipeline projects. Diagrams enhance communication among team members and stakeholders. Visual aids bridge the gap between technical and non-technical audiences. Clear diagrams ensure that everyone understands the project's scope and objectives. Enhanced communication leads to better collaboration and more informed decision-making.

Common Diagram Types

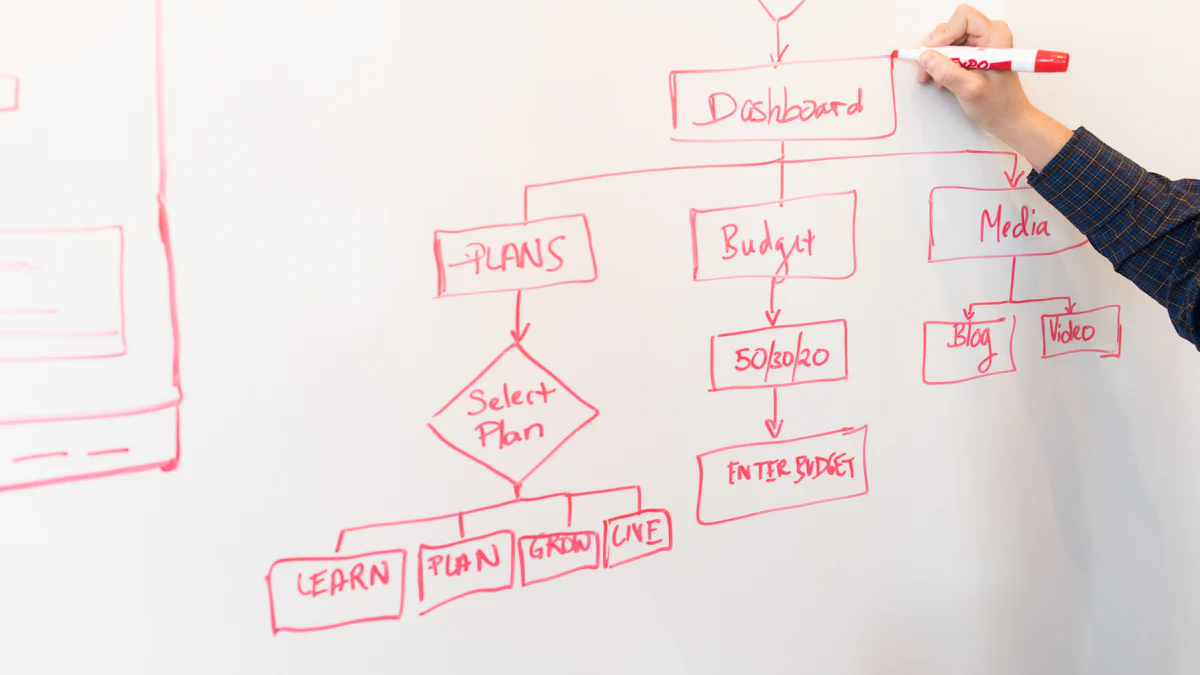

Flowcharts

Flowcharts represent the sequential flow of data through a pipeline. These diagrams use symbols to denote different stages and processes. Arrows indicate the direction of data movement. Flowcharts provide a high-level overview of the pipeline's structure. Data engineers use flowcharts to plan and document the pipeline's workflow.

Architecture Diagrams

Architecture diagrams offer a detailed view of the data pipeline's components. These diagrams illustrate the relationships between data sources, processing units, and storage solutions. Architecture diagrams often include annotations to explain each component's role. Detailed visuals help identify potential bottlenecks and areas for optimization. Data engineers rely on architecture diagrams for designing and troubleshooting pipelines.

Sequence Diagrams

Sequence diagrams depict the interaction between different components over time. These diagrams show how data flows from one stage to another. Sequence diagrams highlight the timing and order of operations within the pipeline. Data engineers use sequence diagrams to ensure that processes occur in the correct sequence. Accurate sequencing prevents data processing errors and ensures smooth pipeline operation.

Patterns in Data Pipeline Architecture

Common Design Patterns

Lambda Architecture

Lambda Architecture combines batch and real-time data processing so that large volumes of data can be handled without giving up freshness. The batch layer periodically recomputes results over the full data set, which makes it the accurate source of truth. The speed layer processes only recent data in real time, providing low-latency updates until the next batch run catches up. A serving layer merges the two views to answer queries, giving both accuracy and speed. Tools like Apache Hadoop (batch layer) and Apache Storm (speed layer) support Lambda Architecture.
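The interplay between the layers can be sketched with a toy page-view counter. Everything here is an assumption made for illustration: the event shape, the `batch_cutoff` timestamp, and the function names. In a real system the batch and speed views would be computed by separate engines and merged at query time.

```python
from collections import Counter

# Assumption: page-view events; the batch layer has processed everything up to
# batch_cutoff, and the speed layer covers events after it.
events = [
    {"ts": 1, "page": "/home"},
    {"ts": 2, "page": "/docs"},
    {"ts": 3, "page": "/home"},
    {"ts": 4, "page": "/home"},  # arrived after the last batch run
]
batch_cutoff = 3


def batch_view(all_events, cutoff):
    """Recompute counts from the full history up to the cutoff."""
    return Counter(e["page"] for e in all_events if e["ts"] <= cutoff)


def speed_view(all_events, cutoff):
    """Count only recent events the batch layer has not seen yet."""
    return Counter(e["page"] for e in all_events if e["ts"] > cutoff)


def serve(batch, speed):
    """Answer queries by merging the batch and real-time views."""
    return dict(batch + speed)


merged = serve(batch_view(events, batch_cutoff), speed_view(events, batch_cutoff))
```

The cost of this pattern is visible even in the sketch: the counting logic exists twice, once per layer, and both copies must stay in agreement.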

Kappa Architecture

Kappa Architecture simplifies data processing by focusing on real-time data. This pattern eliminates the batch layer, relying solely on stream processing. Real-time data flows through a single pipeline, reducing complexity. Kappa Architecture suits applications requiring immediate data insights. Technologies like Apache Kafka and Apache Flink enable Kappa Architecture.
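A minimal sketch of the single-pipeline idea follows. It assumes an append-only Python list standing in for a replayable log such as a Kafka topic, and the account-balance events and function names are hypothetical. The point is that live processing and reprocessing share one code path.

```python
# Assumption: an append-only list stands in for a replayable log.
log = [
    {"account": "a", "delta": 100},
    {"account": "b", "delta": 50},
    {"account": "a", "delta": -30},
]


def apply_event(state, event):
    """The single processing path: fold one event into the current state."""
    state[event["account"]] = state.get(event["account"], 0) + event["delta"]
    return state


def replay(events, from_offset=0):
    """Rebuild state by re-reading the log from a given offset."""
    state = {}
    for event in events[from_offset:]:
        apply_event(state, event)
    return state


live_state = {}
for event in log:            # normal operation: process events as they arrive
    apply_event(live_state, event)

rebuilt_state = replay(log)  # reprocessing: same code, replayed from the start
```

When the processing logic changes, results are corrected by replaying the log through the new version rather than by running a separate batch job.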

Data Lakehouse

Data Lakehouse merges the benefits of data lakes and data warehouses. This pattern stores raw and processed data in a unified repository and supports both structured and unstructured data, which provides flexibility for various analytical needs. Open table formats like Delta Lake and Apache Iceberg provide the foundation for Data Lakehouse architecture.

Best Practices

Scalability

Scalability means a data pipeline can keep up as data volumes grow. Designing for scalability usually involves distributed systems: Apache Spark spreads computation across a cluster, and Kubernetes can scale the containers that run pipeline workloads. Monitoring and optimizing resource usage keeps performance steady as load changes. Scalable pipelines adapt to changing data demands.
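Distributed systems typically scale by splitting data into partitions that workers process independently. The sketch below shows one common approach, hash partitioning, in plain Python. The `partition_for` and `partition` helpers and the `user` key field are hypothetical, and this is not how any particular framework assigns partitions.

```python
import hashlib


def partition_for(key: str, num_partitions: int) -> int:
    """Map a key to a partition with a stable hash.

    hashlib is used instead of the built-in hash(), which is randomized
    per process for strings and so is not stable across workers.
    """
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions


def partition(records, key_field, num_partitions):
    """Split records into per-worker buckets by key."""
    buckets = [[] for _ in range(num_partitions)]
    for record in records:
        buckets[partition_for(record[key_field], num_partitions)].append(record)
    return buckets


records = [{"user": f"user-{i}", "value": i} for i in range(100)]
buckets = partition(records, "user", num_partitions=4)
```

Because the same key always maps to the same partition, all records for one user land on one worker, which keeps per-key aggregation local.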

Fault Tolerance

Fault tolerance keeps a data pipeline reliable when individual components fail. Implementing it involves redundancy and error handling. Techniques like data replication and checkpointing enhance resilience by letting a pipeline recover without starting over. Tools like Apache Kafka, which replicates data across brokers, and AWS Step Functions, which supports retry and error-handling rules, help build fault-tolerant designs. Reliable pipelines minimize data loss and downtime.
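Retries and checkpointing can be combined in a small sketch. The code below is an illustration under stated assumptions: `run_with_checkpoint` is a hypothetical function, progress is stored in a local JSON file, and the `flaky` processor simulates a transient error. Production systems would also add backoff between retries and store checkpoints durably.

```python
import json
import os
import tempfile


def run_with_checkpoint(items, process, checkpoint_path, max_retries=3):
    """Process items in order, persisting progress so a restart can resume."""
    start = 0
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            start = json.load(f)["next_index"]
    for index in range(start, len(items)):
        for attempt in range(1, max_retries + 1):
            try:
                process(items[index])
                break
            except Exception:
                if attempt == max_retries:
                    raise  # give up; the checkpoint still marks where to resume
        with open(checkpoint_path, "w") as f:
            json.dump({"next_index": index + 1}, f)


# Demo: a processor that fails once on item "b" to simulate a transient error.
processed, failures = [], {"b": 1}


def flaky(item):
    if failures.get(item, 0) > 0:
        failures[item] -= 1
        raise RuntimeError("transient failure")
    processed.append(item)


checkpoint = os.path.join(tempfile.mkdtemp(), "checkpoint.json")
run_with_checkpoint(["a", "b", "c"], flaky, checkpoint)
run_with_checkpoint(["a", "b", "c"], flaky, checkpoint)  # resumes at the end; nothing is redone
```

Note that the checkpoint is written after each item succeeds, so a crash between the two steps would cause that item to be processed again on restart. This gives at-least-once behavior, and the processing step should tolerate repeats.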

Security

Security protects data throughout the pipeline. Implementing security involves encryption and access controls. Encryption keeps data confidential in transit and at rest, while access controls prevent unauthorized users from reading or changing it. Tools like AWS IAM, for managing permissions, and HashiCorp Vault, for managing secrets, provide robust security measures. Secure pipelines safeguard sensitive information.
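As one small example of protecting integrity, the sketch below signs a record with HMAC-SHA256 from the Python standard library so that a later stage can detect tampering. It covers integrity only, not encryption, and the hard-coded key is an assumption for the demo. In practice the key would come from a secrets manager such as Vault.

```python
import hashlib
import hmac

# Assumption: in practice the key comes from a secrets manager, never from source code.
SECRET_KEY = b"demo-key-for-illustration-only"


def sign(payload: bytes, key: bytes = SECRET_KEY) -> str:
    """Return a hex HMAC-SHA256 tag for the payload."""
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(payload: bytes, tag: str, key: bytes = SECRET_KEY) -> bool:
    """Check the tag using a constant-time comparison."""
    return hmac.compare_digest(sign(payload, key), tag)


record = b'{"user_id": 42, "balance": 100}'
tag = sign(record)

untouched_ok = verify(record, tag)
tampered_ok = verify(b'{"user_id": 42, "balance": 999}', tag)
```

A producer stage would attach `tag` to each record, and a consumer stage would call `verify` before trusting the payload.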

This post has covered the essential aspects of data pipeline architecture. A well-designed architecture ensures efficient data flow and processing, and applying the patterns and best practices discussed here can strengthen an organization's data operations. Effective data pipelines lead to better decision-making and operational efficiency. For further understanding, readers should explore additional resources on data engineering and architecture.