S3 Is Slow; You Need a Cache

In recent years, many data systems have been designed around Amazon S3 and other object storage services. Projects such as Neon and WarpStream treat S3 as their primary storage layer. This trend is not accidental. Object storage offers practically unlimited capacity, strong durability, and a much lower cost per gigabyte than block storage or local SSDs. For database developers, it also removes the burden of managing disks directly, letting them focus on building features while relying on the cloud provider for durability and scalability. With these advantages, S3 has become the de facto backbone for new database and data infrastructure projects.

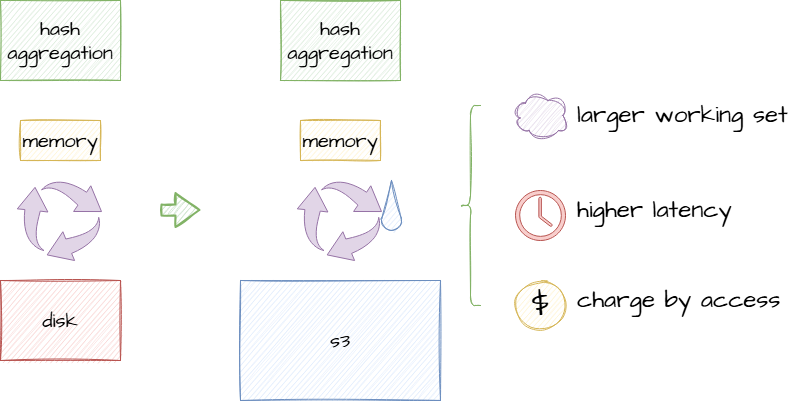

However, S3 is not built for low-latency access. Every read requires a network round trip, and every request adds cost. Its latency is orders of magnitude higher than that of memory or local SSDs. When applications repeatedly fetch the same data, these delays accumulate, making systems sluggish while inflating storage bills. Systems that rely solely on S3 face a constant trade-off between low cost and acceptable performance.

For RisingWave, the challenge is even greater. As a streaming system, it executes continuous queries whose results depend on processing data in real time. A single S3 access can take tens of milliseconds, and hundreds under load. When processing requires several S3 reads every second, these delays compound rapidly until they become unacceptable. This extreme sensitivity to latency is why we felt the pain of S3 more acutely than others.

A cache provides a way to close this gap. By keeping hot data in memory and warm data on local disk, a cache reduces the number of requests sent to S3. This lowers latency, stabilizes performance, and reduces recurring storage costs. Applications no longer need to fetch the same data repeatedly from remote storage but can instead serve most queries locally while still relying on S3 for durability and scale. To make this practical for real-time systems like RisingWave, we built Foyer, a hybrid caching library in Rust that unifies memory and disk caching into one coherent layer.

Inside Foyer’s Architecture

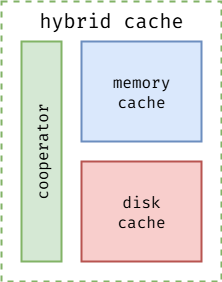

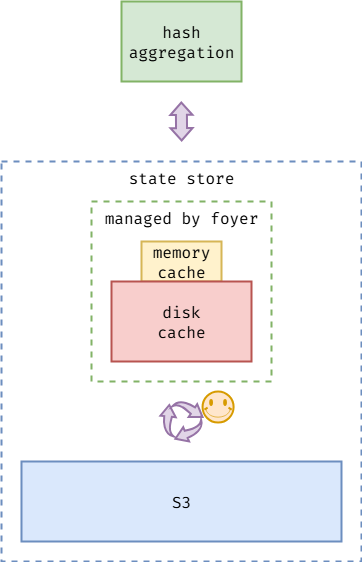

Now that we have seen why caching is critical for S3-based systems, the next question is how to design a cache that works at both the speed of memory and the scale of disk. Foyer answers this by combining multiple components into a single hybrid system. At a high level, it consists of three parts: a memory cache for low-latency access, a disk cache for expanded capacity, and a coordinator that unifies the two layers.

Memory Cache: The First Layer

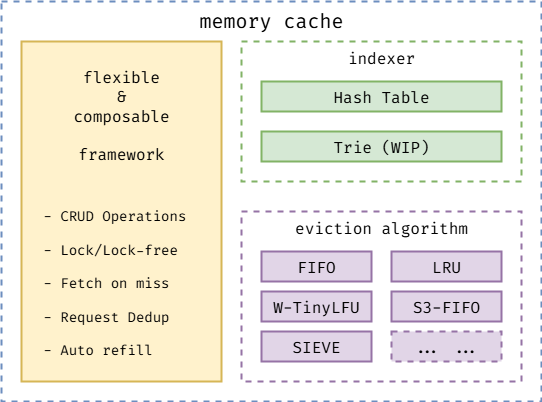

The memory cache, implemented in the foyer-memory crate, is the fastest lookup path. It is sharded to handle high concurrency and offers a choice of eviction algorithms, including LRU, FIFO, W-TinyLFU, S3-FIFO, and SIEVE. Each shard maintains its own index and eviction state, which reduces lock contention under heavy load.
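To make the sharding idea concrete, here is a minimal sketch in Rust. It assumes LRU eviction in every shard and uses a standard-library hash to pick the shard. The names `ShardedCache` and `LruShard` are hypothetical. This is neither Foyer's API nor its internal data structure; it only illustrates why per-shard locks reduce contention.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::{BTreeMap, HashMap};
use std::hash::{Hash, Hasher};
use std::sync::Mutex;

/// One shard: an LRU index guarded by its own lock.
struct LruShard<K, V> {
    capacity: usize,
    tick: u64,                 // monotonically increasing access stamp
    map: HashMap<K, (V, u64)>, // key -> (value, last-access stamp)
    order: BTreeMap<u64, K>,   // stamp -> key, oldest first
}

impl<K: Hash + Eq + Clone, V: Clone> LruShard<K, V> {
    fn new(capacity: usize) -> Self {
        Self { capacity, tick: 0, map: HashMap::new(), order: BTreeMap::new() }
    }

    fn get(&mut self, key: &K) -> Option<V> {
        self.tick += 1;
        let tick = self.tick;
        let entry = self.map.get_mut(key)?;
        let old = std::mem::replace(&mut entry.1, tick); // refresh recency stamp
        let value = entry.0.clone();
        self.order.remove(&old);
        self.order.insert(tick, key.clone());
        Some(value)
    }

    fn insert(&mut self, key: K, value: V) {
        self.tick += 1;
        let tick = self.tick;
        if let Some((_, old)) = self.map.insert(key.clone(), (value, tick)) {
            self.order.remove(&old);
        }
        self.order.insert(tick, key);
        while self.map.len() > self.capacity {
            // Evict the least recently used entry (smallest stamp).
            let (_, victim) = self.order.pop_first().expect("every key has a stamp");
            self.map.remove(&victim);
        }
    }
}

/// Keys are hashed to a shard, so threads touching different keys rarely contend.
pub struct ShardedCache<K, V> {
    shards: Vec<Mutex<LruShard<K, V>>>,
}

impl<K: Hash + Eq + Clone, V: Clone> ShardedCache<K, V> {
    pub fn new(num_shards: usize, capacity_per_shard: usize) -> Self {
        assert!(num_shards > 0, "need at least one shard");
        let shards = (0..num_shards)
            .map(|_| Mutex::new(LruShard::new(capacity_per_shard)))
            .collect();
        Self { shards }
    }

    fn shard(&self, key: &K) -> &Mutex<LruShard<K, V>> {
        let mut hasher = DefaultHasher::new();
        key.hash(&mut hasher);
        &self.shards[(hasher.finish() % self.shards.len() as u64) as usize]
    }

    pub fn get(&self, key: &K) -> Option<V> {
        self.shard(key).lock().unwrap().get(key)
    }

    pub fn insert(&self, key: K, value: V) {
        self.shard(&key).lock().unwrap().insert(key, value);
    }
}
```

Each key maps to exactly one shard, so two threads contend only when their keys land in the same shard. Adding shards trades a little memory for less contention.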

Foyer also guards against duplicated work. When several requests for the same missing key arrive at the same time, only one fetch is issued, and the others wait for its result. This request deduplication prevents redundant trips to disk or remote storage. Rust's zero-copy abstractions further reduce overhead by minimizing unnecessary serialization and copying.
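Request deduplication is often called "single-flight." The sketch below shows the idea with standard-library threads and assumes a blocking `fetch` closure. `SingleFlight` and `get_or_fetch` are hypothetical names, and Foyer's own implementation is asynchronous and differs in detail. Error and panic handling are omitted, so a panicking fetch would leave its waiters blocked.

```rust
use std::collections::HashMap;
use std::hash::Hash;
use std::sync::{Arc, Condvar, Mutex};

/// Shared slot that the leader fills in and the waiters block on.
type Slot<V> = Arc<(Mutex<Option<V>>, Condvar)>;

pub struct SingleFlight<K, V> {
    in_flight: Mutex<HashMap<K, Slot<V>>>,
}

impl<K: Hash + Eq + Clone, V: Clone> SingleFlight<K, V> {
    pub fn new() -> Self {
        Self { in_flight: Mutex::new(HashMap::new()) }
    }

    /// Concurrent callers for the same key share one `fetch`: the first caller
    /// (the leader) runs it, and the rest wait for a clone of its result.
    pub fn get_or_fetch<F: FnOnce() -> V>(&self, key: K, fetch: F) -> V {
        let (slot, is_leader) = {
            let mut map = self.in_flight.lock().unwrap();
            let existing = map.get(&key).cloned();
            match existing {
                Some(slot) => (slot, false),
                None => {
                    let slot: Slot<V> = Arc::new((Mutex::new(None), Condvar::new()));
                    map.insert(key.clone(), Arc::clone(&slot));
                    (slot, true)
                }
            }
        };
        let (result, ready) = &*slot;
        if is_leader {
            let value = fetch(); // the only fetch issued while this key is in flight
            *result.lock().unwrap() = Some(value.clone());
            ready.notify_all();
            self.in_flight.lock().unwrap().remove(&key);
            value
        } else {
            let mut guard = result.lock().unwrap();
            while guard.is_none() {
                guard = ready.wait(guard).unwrap();
            }
            (*guard).clone().expect("leader stored a value")
        }
    }
}
```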

Disk Cache: Extending the Working Set

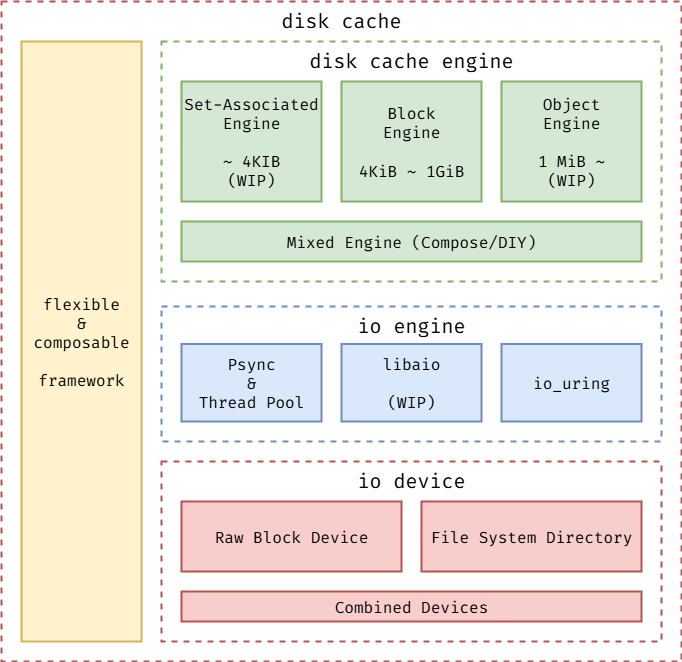

When memory does not contain the requested data, Foyer falls back to the disk cache. This layer is implemented in the foyer-storage crate and provides much larger capacity than RAM, without the latency of repeated S3 calls. The disk cache is modular, with multiple engines suited for different data profiles. The block engine is tuned for medium-sized records, the set-associative engine is designed for small entries, and an object engine is under development for large objects.
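The set-associative idea can be illustrated with a small in-memory model. The model assumes that each set occupies a fixed-size region of the device (for example, one 4 KiB block) and that eviction is FIFO within a set. `SetAssociativeCache` is a hypothetical name, and this is not Foyer's on-disk format. The point is that a key's hash alone locates its set, so small entries need no per-key index in memory.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Illustrative set-associative layout: the device is split into fixed-size sets,
/// a key's hash picks exactly one set, and eviction happens only within that set.
pub struct SetAssociativeCache {
    set_bytes: u64,                 // bytes reserved per set on the device (assumed)
    ways: usize,                    // maximum entries per set
    sets: Vec<Vec<(u64, Vec<u8>)>>, // in-memory stand-in for the on-disk sets
}

impl SetAssociativeCache {
    pub fn new(num_sets: usize, ways: usize, set_bytes: u64) -> Self {
        assert!(num_sets > 0 && ways > 0);
        Self { set_bytes, ways, sets: vec![Vec::new(); num_sets] }
    }

    fn hash_of<K: Hash>(key: &K) -> u64 {
        let mut hasher = DefaultHasher::new();
        key.hash(&mut hasher);
        hasher.finish()
    }

    fn set_index(&self, hash: u64) -> usize {
        (hash % self.sets.len() as u64) as usize
    }

    /// Byte offset of the set that would hold `key`; no per-key index is needed.
    pub fn device_offset<K: Hash>(&self, key: &K) -> u64 {
        self.set_index(Self::hash_of(key)) as u64 * self.set_bytes
    }

    pub fn insert<K: Hash>(&mut self, key: &K, value: Vec<u8>) {
        let hash = Self::hash_of(key);
        let idx = self.set_index(hash);
        let ways = self.ways;
        let set = &mut self.sets[idx];
        set.retain(|(h, _)| *h != hash); // drop an older version of the same key
        if set.len() == ways {
            set.remove(0); // set is full: evict its oldest entry (FIFO within the set)
        }
        set.push((hash, value));
    }

    /// Compares 64-bit hashes only; a real engine would also verify the stored key.
    pub fn get<K: Hash>(&self, key: &K) -> Option<&[u8]> {
        let hash = Self::hash_of(key);
        self.sets[self.set_index(hash)]
            .iter()
            .find(|(h, _)| *h == hash)
            .map(|(_, v)| v.as_slice())
    }
}
```

The trade-off is that a popular set can evict entries while other sets still have room. For many small entries, where a per-key index would otherwise dominate memory use, that is usually acceptable.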

The I/O layer underneath is also flexible. Developers can select between synchronous reads and writes or modern asynchronous interfaces like io_uring. The storage backend itself can be a file, a raw block device, or a directory, giving operators the ability to adapt Foyer to their hardware and deployment model.
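The sketch below illustrates the synchronous path over a file backend. It stores fixed-size blocks in a single file using positional reads and writes: `read_exact_at` and `write_all_at` from Rust's Unix `FileExt`, which correspond to `pread` and `pwrite`. `FileBlockStore` is hypothetical and is not Foyer's device abstraction. An io_uring backend would submit the same offset-based operations asynchronously instead of blocking the calling thread.

```rust
use std::fs::{File, OpenOptions};
use std::io;
use std::os::unix::fs::FileExt;
use std::path::Path;

/// Hypothetical fixed-size block store over one file, using synchronous
/// positional I/O. Unix-only.
pub struct FileBlockStore {
    file: File,
    block_size: u64,
}

impl FileBlockStore {
    pub fn open(path: &Path, block_size: u64) -> io::Result<Self> {
        let file = OpenOptions::new()
            .read(true)
            .write(true)
            .create(true)
            .truncate(false) // keep existing cached blocks across restarts
            .open(path)?;
        Ok(Self { file, block_size })
    }

    /// Writes `data` (at most one block) at the start of block `index`.
    pub fn write_block(&self, index: u64, data: &[u8]) -> io::Result<()> {
        assert!(data.len() as u64 <= self.block_size, "data exceeds block size");
        self.file.write_all_at(data, index * self.block_size)
    }

    /// Reads `len` bytes from the start of block `index`; fails past end of file.
    pub fn read_block(&self, index: u64, len: usize) -> io::Result<Vec<u8>> {
        let mut buf = vec![0u8; len];
        self.file.read_exact_at(&mut buf, index * self.block_size)?;
        Ok(buf)
    }
}
```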

Coordinator: The Glue

The coordinator, inside the main foyer crate, ties memory and disk into a unified system. It promotes entries from disk to memory when they are accessed frequently, writes new data back after a miss, and ensures the API stays consistent regardless of which layer the data comes from. It also consolidates concurrent fetches to prevent storms of redundant requests when many clients look for the same key.
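The exact promotion rule Foyer uses is not spelled out here, so the following is only a hypothetical policy: promote a disk-resident entry to memory once it has been read from disk a given number of times. `PromotionPolicy`, `threshold`, and `reset_window` are illustrative names, not Foyer APIs.

```rust
use std::collections::hash_map::Entry;
use std::collections::HashMap;
use std::hash::Hash;

/// Hypothetical rule: copy a disk hit into memory only after the key has been
/// read from disk `threshold` times within the current counting window.
pub struct PromotionPolicy<K> {
    threshold: u32,
    disk_hits: HashMap<K, u32>,
}

impl<K: Hash + Eq> PromotionPolicy<K> {
    pub fn new(threshold: u32) -> Self {
        Self { threshold: threshold.max(1), disk_hits: HashMap::new() }
    }

    /// Records a disk hit and returns true if the entry should now be promoted.
    pub fn on_disk_hit(&mut self, key: K) -> bool {
        let threshold = self.threshold;
        match self.disk_hits.entry(key) {
            Entry::Occupied(mut e) => {
                *e.get_mut() += 1;
                if *e.get() >= threshold {
                    e.remove(); // promoted: start counting afresh if it is evicted later
                    true
                } else {
                    false
                }
            }
            Entry::Vacant(e) => {
                if threshold <= 1 {
                    true
                } else {
                    e.insert(1);
                    false
                }
            }
        }
    }

    /// Periodically forgets old counts so that stale popularity does not linger.
    pub fn reset_window(&mut self) {
        self.disk_hits.clear();
    }
}
```

A threshold of 1 promotes on every disk hit, while larger values keep one-off reads from displacing hot entries in RAM.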

Modes of Operation

Foyer supports two distinct modes of operation: hybrid mode and memory-only mode.

In hybrid mode, both memory and disk caches are active. Hot data is served directly from memory, while warm data that does not fit in memory can still be retrieved from disk. Only when data is absent from both layers does Foyer issue a fetch to S3 or another remote source. Once the data is retrieved, it is written back to both disk and memory so that future requests are served locally. This design dramatically reduces the number of S3 calls and makes the system suitable for workloads where cost and latency must be controlled at scale.

In memory-only mode, the disk cache is disabled, and all operations are performed in memory. This mode is useful for smaller workloads, ephemeral jobs, or testing environments where persistence and larger capacity are unnecessary. Importantly, the API remains identical across both modes. Developers can start with a simple memory cache and later add disk caching without changing their application logic, which makes adoption smoother.
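Here is a minimal sketch of this read path. It uses plain `HashMap`s as stand-ins for the memory and disk tiers and a caller-supplied closure in place of the S3 fetch. `TieredCache` is hypothetical and omits eviction and concurrency. It illustrates that the same `get_or_fetch` call works whether or not the disk tier is present.

```rust
use std::collections::HashMap;
use std::hash::Hash;

/// Hypothetical two-tier cache; `disk` is `None` in memory-only mode.
/// HashMaps stand in for the real memory and disk caches (no eviction here).
pub struct TieredCache<K, V> {
    memory: HashMap<K, V>,
    disk: Option<HashMap<K, V>>,
    pub remote_fetches: usize, // how often a lookup fell through to remote storage
}

impl<K: Hash + Eq + Clone, V: Clone> TieredCache<K, V> {
    pub fn new(hybrid: bool) -> Self {
        let disk = if hybrid { Some(HashMap::new()) } else { None };
        Self { memory: HashMap::new(), disk, remote_fetches: 0 }
    }

    /// Memory first, then disk (if enabled), then the remote fetch, with write-back.
    pub fn get_or_fetch(&mut self, key: &K, fetch_remote: impl FnOnce(&K) -> V) -> V {
        if let Some(v) = self.memory.get(key) {
            return v.clone();
        }
        if let Some(v) = self.disk.as_ref().and_then(|d| d.get(key)).cloned() {
            self.memory.insert(key.clone(), v.clone()); // promote on every disk hit, for simplicity
            return v;
        }
        self.remote_fetches += 1;
        let v = fetch_remote(key);
        if let Some(d) = self.disk.as_mut() {
            d.insert(key.clone(), v.clone());
        }
        self.memory.insert(key.clone(), v.clone());
        v
    }

    /// Simulates memory pressure evicting everything from the memory tier.
    pub fn clear_memory(&mut self) {
        self.memory.clear();
    }
}
```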

Benefits and Trade-offs

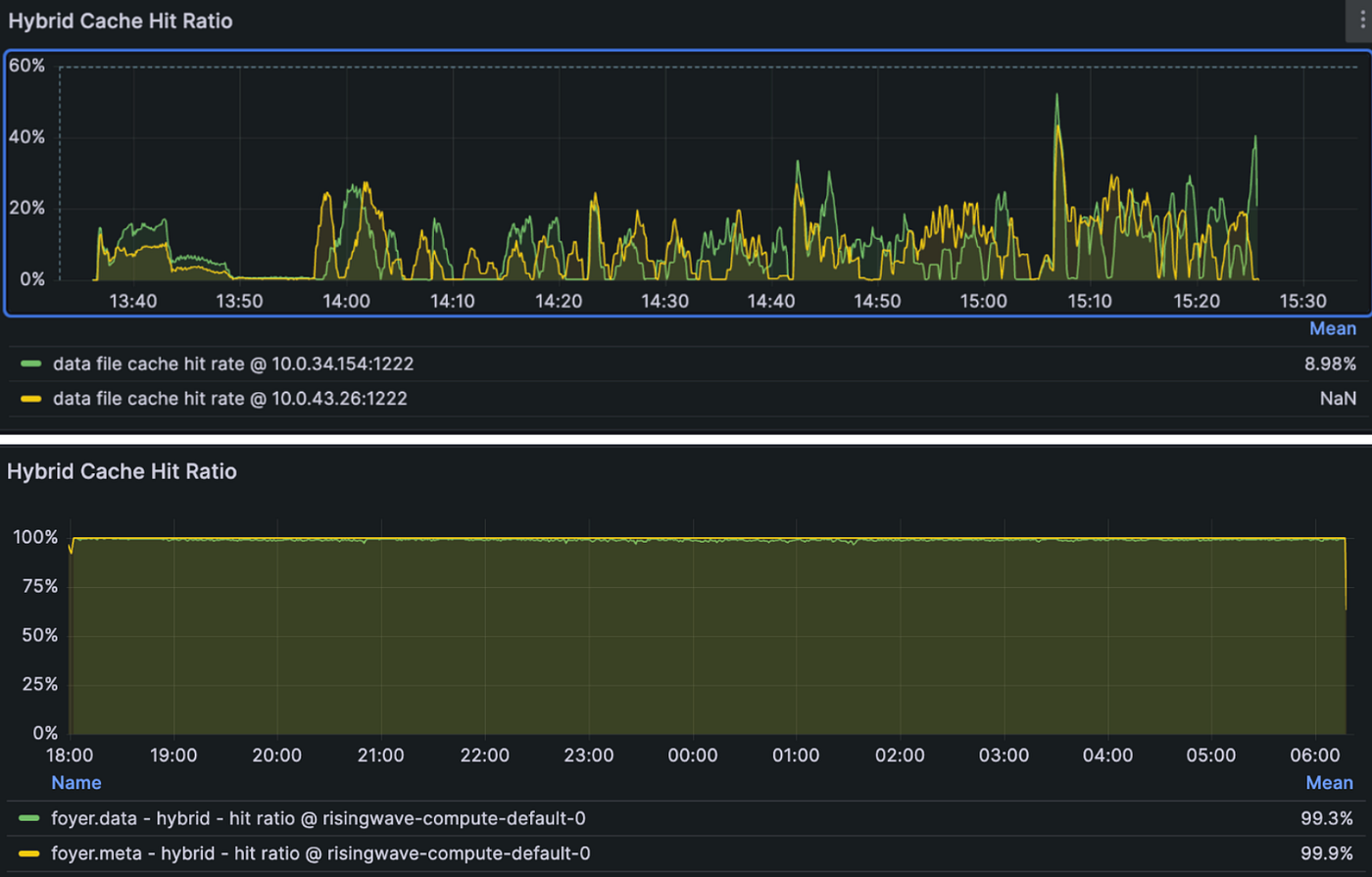

The hybrid cache design brings significant benefits. Latency improves because most queries are served locally. Cost is reduced because far fewer requests hit S3. Capacity increases since disk supplements RAM, allowing a much larger portion of the working set to stay near the application. Foyer also emphasizes configurability. Developers can choose eviction strategies, disk engines, and I/O mechanisms that fit their workloads. Built-in observability allows integration with Prometheus, Grafana, and OpenTelemetry so operators can monitor hit rates, latencies, and cache behavior in production.

At the same time, hybrid caching introduces trade-offs. The system is more complex than a simple in-memory cache, which means configuration and tuning are important. A poorly chosen eviction algorithm or disk engine can reduce effectiveness. Disk is slower than memory, so capacity planning must ensure that hot data stays in RAM to keep latency low. Monitoring is essential because silent cache degradation can easily ripple into application-level performance problems.
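To catch silent degradation, a useful signal is the fraction of lookups served locally. The sketch below keeps hypothetical per-tier counters using atomics. `CacheMetrics` is not a Foyer type; in production, such counters would be exported through a metrics library to Prometheus or a similar system rather than read directly.

```rust
use std::sync::atomic::{AtomicU64, Ordering};

/// Hypothetical per-tier lookup counters, safe to update from many threads.
#[derive(Default)]
pub struct CacheMetrics {
    memory_hits: AtomicU64,
    disk_hits: AtomicU64,
    misses: AtomicU64, // lookups that had to go to remote storage
}

impl CacheMetrics {
    pub fn record_memory_hit(&self) {
        self.memory_hits.fetch_add(1, Ordering::Relaxed);
    }

    pub fn record_disk_hit(&self) {
        self.disk_hits.fetch_add(1, Ordering::Relaxed);
    }

    pub fn record_miss(&self) {
        self.misses.fetch_add(1, Ordering::Relaxed);
    }

    /// Fraction of lookups served from memory or disk without touching S3.
    pub fn local_hit_ratio(&self) -> f64 {
        let mem = self.memory_hits.load(Ordering::Relaxed);
        let disk = self.disk_hits.load(Ordering::Relaxed);
        let miss = self.misses.load(Ordering::Relaxed);
        let total = mem + disk + miss;
        if total == 0 {
            0.0
        } else {
            (mem + disk) as f64 / total as f64
        }
    }
}
```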

How RisingWave Uses Foyer in Production

RisingWave is a streaming database that was built from day one to use S3 as its primary storage. Unlike many systems that rely on S3 only for backups or cold data, RisingWave stores state, materialized views, operator outputs, and recovery logs directly in S3. This architecture makes the system highly elastic and cost-efficient, but it also brings real challenges for latency-sensitive workloads. Foyer plays a critical role in addressing these challenges.

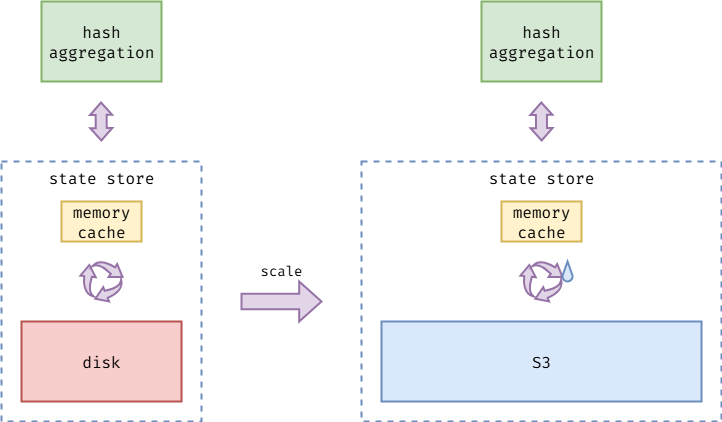

Without Foyer: frequent data swapping between memory and S3. Image Source: the author.

A Three-Tier Storage Architecture

RisingWave adopts a three-tier storage design that integrates memory, local disk, and S3:

Memory holds the hottest data and provides the fastest response path.

Local disk acts as an intermediate cache, buffering data that does not fit in memory but is accessed frequently. This layer greatly reduces the number of requests sent to S3.

S3 remains the durable and scalable storage layer, but it is consulted only when data is not found in either memory or disk.

Foyer manages the disk cache and coordinates it with the in-memory tier, deciding how data moves between memory, local disk, and S3 so that most queries can be served without directly hitting object storage.

Controlling Latency and Reducing Costs

In practice, RisingWave observed that even simple S3 reads often have a time-to-first-byte of 30 ms, and under load this can stretch to 100–300 ms. For stream processing workloads, where operators continuously read and update state, such delays quickly accumulate. Without a cache, each operator could incur multiple S3 calls per second, making real-time performance impossible.

With Foyer, RisingWave significantly reduces the number of direct S3 accesses. Most requests are served either from memory or from disk, with only a small fraction reaching S3. This keeps query latency within acceptable bounds while also avoiding the explosion of costs caused by frequent small S3 requests.
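The effect of hit ratios on latency can be estimated with a weighted average: E[L] = h_mem · L_mem + h_disk · L_disk + (1 − h_mem − h_disk) · L_s3. The 30 ms S3 figure comes from the measurements above. The memory latency (1 µs), the disk latency (0.2 ms), and the hit ratios (80% memory, 15% disk) are assumptions chosen for illustration, not RisingWave measurements.

```rust
/// Expected per-read latency of a three-tier read path:
/// E[L] = h_mem * L_mem + h_disk * L_disk + (1 - h_mem - h_disk) * L_s3,
/// where h_mem and h_disk are the fractions of reads served by each tier.
pub fn expected_latency_ms(h_mem: f64, h_disk: f64, l_mem: f64, l_disk: f64, l_s3: f64) -> f64 {
    assert!(h_mem >= 0.0 && h_disk >= 0.0 && h_mem + h_disk <= 1.0);
    let miss = 1.0 - h_mem - h_disk; // fraction of reads that reach S3
    h_mem * l_mem + h_disk * l_disk + miss * l_s3
}
```

With these assumed inputs, the expected read latency drops from 30 ms to about 1.53 ms, and only 5% of reads reach S3. At a 300 ms tail, the same hit ratios give about 15 ms. The S3 term still dominates, so raising the local hit ratio matters more than making local reads faster.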


Cache Management and Strategies

The integration of Foyer into RisingWave is not limited to storing blocks on disk. Several strategies enhance its effectiveness:

Block-level reads: multiple logical rows are packed into larger blocks, reducing the number of S3 requests.

Sparse indexes: each table or materialized view maintains indexes that point to the relevant S3 objects and offsets, allowing the system to skip unnecessary lookups.

Prefetching: when the query plan expects to scan multiple blocks, subsequent blocks are proactively loaded into cache.

Cache hydration: newly written data is immediately preloaded into memory and disk caches so that subsequent reads do not fall back to S3.
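The sparse-index and prefetching ideas can be sketched together. The sketch assumes that each data file is a sequence of blocks sorted by key and that the index keeps only the first key of each block. `SparseIndex` and its methods are hypothetical and do not reflect RisingWave's actual format. `blocks_for_range` returns the range of blocks a scan would touch, which is what a prefetcher would load ahead of time.

```rust
/// Hypothetical sparse index over one sorted file: only the first key of each
/// block is stored, so a point lookup needs to read a single block.
pub struct SparseIndex {
    first_keys: Vec<Vec<u8>>, // first key of block i, strictly ascending
}

impl SparseIndex {
    pub fn new(first_keys: Vec<Vec<u8>>) -> Self {
        assert!(first_keys.windows(2).all(|w| w[0] < w[1]), "first keys must be sorted");
        Self { first_keys }
    }

    /// The only block that can contain `key`, or None if `key` precedes every block.
    pub fn block_for(&self, key: &[u8]) -> Option<usize> {
        // Count blocks whose first key is <= key; the last of them is the candidate.
        let n = self.first_keys.partition_point(|k| k.as_slice() <= key);
        n.checked_sub(1)
    }

    /// Blocks a scan over [start, end] will touch; these could be prefetched
    /// into the cache up front instead of being fetched one at a time.
    pub fn blocks_for_range(&self, start: &[u8], end: &[u8]) -> std::ops::Range<usize> {
        if start > end {
            return 0..0;
        }
        let first = self.block_for(start).unwrap_or(0);
        let last = self.first_keys.partition_point(|k| k.as_slice() <= end);
        first..last
    }
}
```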

Deep Integration with RisingWave

Foyer is not a bolt-on component but a deeply integrated part of RisingWave’s storage stack.

It manages disk-level I/O, including read and write scheduling as well as cache invalidation and recovery.

It feeds back information about access patterns, helping RisingWave adapt caching strategies dynamically.

It works closely with RisingWave’s compaction process, ensuring that recent or frequently accessed data remains cached while older cold data is pushed out.

Through this design, Foyer makes it possible for RisingWave to combine the elasticity and cost benefits of S3 with the low-latency requirements of stream processing.

Conclusion

S3 has become the foundation of modern data systems because of its durability, scalability, and low cost. Yet its high and unpredictable latency makes it unsuitable for workloads that demand fast and consistent response times. Many systems live with this trade-off, but for latency-sensitive workloads such as stream processing, the cost is too high.

Hybrid caching provides a way to bridge this gap. By layering memory and disk in front of S3, systems can keep hot and warm data close to the application, while still using S3 as the durable source of truth. The result is lower latency, lower cost, and a storage model that scales naturally with cloud infrastructure.

Foyer was created to meet these needs in RisingWave, where real-time processing cannot tolerate repeated S3 round trips. By combining memory and disk caching under one coherent design, Foyer allows RisingWave to achieve both the elasticity of S3 and the performance of a local database.

The lesson extends beyond RisingWave. Any system built on S3 or other object stores can benefit from the same approach. Hybrid caching is no longer just an optimization but a requirement for making object-store-based architectures viable for real-time and high-throughput workloads.

Foyer GitHub repo: https://github.com/foyer-rs/foyer

RisingWave GitHub repo: https://github.com/risingwavelabs/risingwave