Stream processing has redefined how industries handle real-time data, addressing the limitations of traditional batch systems. The demand for instant insights has made technologies like Apache Kafka and Apache Flink indispensable. These frameworks provide fault tolerance and, when configured for it, exactly-once processing semantics, ensuring reliable performance at scale. Enterprises increasingly rely on real-time data synchronization to keep their systems integrated. The growing adoption of real-time data lakes and warehouses highlights the critical role of stream processing in modern applications, where scalability and precision are paramount.

Key Takeaways

Stream processing analyzes data as it arrives. This lets businesses act quickly and operate more efficiently.

Tools like Apache Kafka and Flink scale with demand. They also tolerate failures well, which makes them a good fit for big data.

Real-time applications in finance, healthcare, and retail improve services. They benefit both customers and businesses.

Combining AI with stream processing delivers fast insights. It helps organizations react to change and innovate.

Cloud-based tools are flexible and cost-effective. They let companies run stream processing without managing their own hardware.

Understanding Stream Processing

What Is Stream Processing?

Stream processing refers to the continuous analysis and processing of data as it flows through a system. Unlike traditional batch processing, which handles data in chunks, stream processing operates on real-time data streams, enabling immediate insights and actions. This approach is essential for applications requiring low latency and high responsiveness.

| Key Concept | Explanation |
| --- | --- |
| Latency | Results must be generated in real time to stay relevant, especially in critical applications. |
| Time Management | Distinguishes event time (when data occurred) from processing time (when the system handles it), which affects result accuracy. |
| Scalability | Distributed computing makes it possible to handle large, unbounded data streams efficiently. |
| Messaging Semantics | Delivery guarantees such as "exactly once" preserve data integrity, which is critical for reliable stream processing. |
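To make the event-time versus processing-time distinction concrete, here is a minimal Python sketch of event-time tumbling windows driven by a watermark. It assumes a 10-unit window and a fixed bound of 5 units on how far out of order events may arrive (both hypothetical values). It counts events per key, emits a window once the watermark passes the window's end, and drops events whose window has already been emitted. Frameworks such as Flink provide their own windowing and watermark APIs; this only illustrates the concept.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

WINDOW_SIZE = 10   # assumed window length, in units of event time (e.g. seconds)
MAX_LATENESS = 5   # assumed bound on how far out of order events may arrive


def tumbling_window_counts(
    events: Iterable[Tuple[int, str]],
) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Count events per key in event-time tumbling windows.

    `events` are (event_time, key) pairs in arrival (processing-time) order.
    The watermark is the largest event time seen minus MAX_LATENESS; a window
    [start, start + WINDOW_SIZE) is emitted once the watermark reaches its end.
    """
    open_windows = defaultdict(lambda: defaultdict(int))
    watermark = float("-inf")

    for event_time, key in events:
        window_start = event_time - event_time % WINDOW_SIZE
        if window_start + WINDOW_SIZE <= watermark:
            continue  # late: the watermark already passed this window's end, so drop it
        open_windows[window_start][key] += 1

        watermark = max(watermark, event_time - MAX_LATENESS)
        for start in sorted(open_windows):  # sorted() copies the keys, so popping is safe
            if start + WINDOW_SIZE <= watermark:
                yield start, dict(open_windows.pop(start))

    # This example input is finite, so flush windows that are still open at the end.
    for start in sorted(open_windows):
        yield start, dict(open_windows.pop(start))


events = [(1, "a"), (4, "b"), (12, "a"), (3, "a"), (16, "b"), (2, "c"), (25, "a")]
for window_start, counts in tumbling_window_counts(events):
    print(window_start, counts)
```

The event at time 3 arrives after an event from the next window but still counts toward window 0, because the watermark has not yet passed that window's end. The event at time 2 arrives after window 0 was emitted and is dropped. Real frameworks typically let you choose between dropping such late events, routing them elsewhere, or updating the result.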

Modern frameworks like Apache Kafka and Flink have revolutionized stream processing by offering robust platforms for real-time analytics and stateful computations. These frameworks ensure scalability, fault tolerance, and low-latency processing, making them indispensable in contemporary computing.

Why Real-Time Data Processing Matters

Real-time data processing plays a pivotal role in industries where timely decisions are critical. For instance, healthcare systems rely on real-time patient monitoring to detect anomalies and prevent emergencies. Similarly, financial institutions use real-time fraud detection to safeguard transactions.

Edge computing has further improved real-time data processing by moving computation closer to data sources, which reduces latency. Research also suggests that it can strengthen security and foster innovation in sectors like finance and healthcare.

Key Applications of Stream Processing

Stream processing has transformed various industries by enabling real-time insights and automation. Some notable applications include:

Finance: Real-time fraud detection and algorithmic trading.

Healthcare: Continuous patient monitoring and diagnostics.

eCommerce: Personalized recommendation engines.

Transportation: Fleet management and route optimization.

Manufacturing: Predictive maintenance to minimize downtime.

Cybersecurity: Anomaly detection to identify potential threats.

These use cases highlight the versatility and importance of stream processing in modern applications. By leveraging real-time data, organizations can improve efficiency, enhance customer experiences, and drive innovation.

The Origins of Stream Processing Frameworks

The Era of Batch Processing

Before the advent of real-time data handling, batch processing dominated the landscape of data management. This method involved collecting data over a period, processing it in bulk, and delivering results after completion. While effective for static datasets, batch processing struggled to meet the demands of dynamic, time-sensitive applications. Industries like finance and manufacturing required faster insights to make critical decisions.

The early 2000s marked a turning point. Companies began exploring alternatives to batch processing, driven by the need for real-time decision-making. Message queues, already used for enterprise integration, became a common way to communicate transactional data in near real time. However, these systems lacked the scalability and fault tolerance required for large-scale applications.

| Year | Milestone |
| --- | --- |
| 2002 | An influential early academic paper on data stream systems was published. |
| 2004 | The MapReduce paper was published, influencing later distributed data processing frameworks, including stream processors. |
| 2000s | Companies began to commercialize stream processing technology, particularly in finance. |

Batch processing laid the foundation for modern data handling techniques. Its limitations, however, highlighted the need for more agile and responsive systems, paving the way for real-time innovations.

Early Innovations in Real-Time Data Handling

The transition from batch to real-time processing began with experimental approaches. Researchers and engineers developed models to monitor production processes in real time. For example, a multivariate data analysis model was created using 26 process parameters and three quality attributes. This model enabled online monitoring of production processes, continuously improving accuracy with new data.

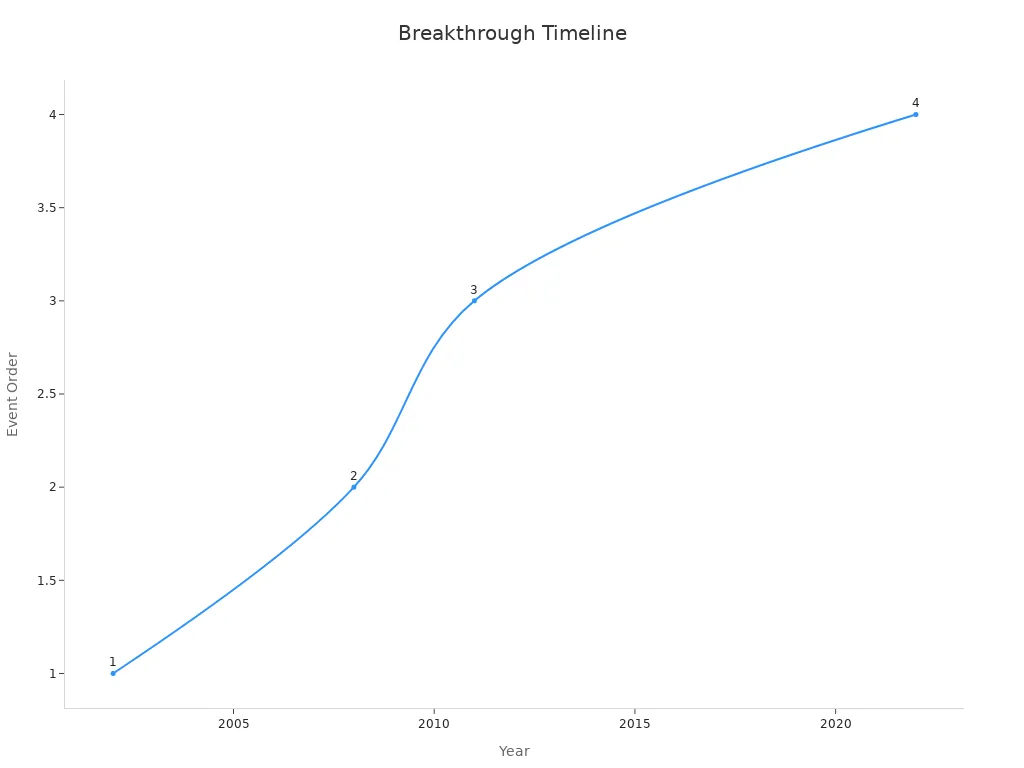

In 2013, Google published the MillWheel paper, which described fault-tolerant stream processing at internet scale. It demonstrated the potential of low-latency data processing for workloads such as search and advertising. Earlier, in the mid-2000s, pioneering companies like StreamBase had begun commercializing stream processing technology, particularly on Wall Street.

| Year | Innovation | Description |
| --- | --- | --- |
| 2002 | Models and Issues in Data Stream Systems | Defined a new model for continuous, rapid data processing. |
| 2011 | Apache Kafka is open-sourced | Became a widely used platform for distributed event streaming. |
| 2013 | MillWheel: Fault-Tolerant Stream Processing at Internet Scale | Introduced scalable, low-latency data processing for internet applications. |

These early innovations laid the groundwork for modern stream processing frameworks. They demonstrated the feasibility of real-time data handling and inspired further advancements in the field.

The Emergence of Event-Driven Architectures

The rise of event-driven architectures marked a significant milestone in the evolution of stream processing. Unlike traditional systems that processed data in predefined intervals, event-driven architectures responded to individual events as they occurred. This approach enabled systems to handle unbounded data streams with minimal latency.
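As a minimal illustration of reacting to individual events instead of waiting for a scheduled batch, the Python sketch below registers handlers per event type and invokes them the moment each event is published. The `EventBus` class, the `payment` event type, and the 1000 threshold are all hypothetical. A production system would put a broker such as Kafka between producers and consumers rather than an in-process dictionary.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Event = Dict[str, Any]
Handler = Callable[[Event], None]


class EventBus:
    """Hypothetical in-process event bus: subscribers react to each event as it is published."""

    def __init__(self) -> None:
        self._handlers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, event: Event) -> None:
        # No batching or schedule: every subscriber handles this single event immediately.
        for handler in self._handlers.get(event_type, []):
            handler(event)


flagged: List[str] = []


def flag_large_payment(event: Event) -> None:
    if event["amount"] > 1000:  # assumed threshold for this example
        flagged.append(event["id"])


bus = EventBus()
bus.subscribe("payment", flag_large_payment)
bus.publish("payment", {"id": "p1", "amount": 50})
bus.publish("payment", {"id": "p2", "amount": 5000})
print(flagged)  # ['p2']
```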

Apache Kafka, open-sourced in 2011, became a cornerstone of event-driven architectures. Its distributed design allowed organizations to publish, store, and process data streams in real time. By 2022, the Stream Community, a non-commercial group of developers and scientists, had begun collaborating on stream processing technology, further advancing the field.

Event-driven architectures revolutionized industries by enabling real-time analytics and automation. They provided the scalability and fault tolerance needed to process vast amounts of data, making them indispensable in modern computing.

The Shift to Real-Time Processing

Transitioning from Batch to Stream Processing

The shift from batch processing to stream processing marked a pivotal moment in data architecture evolution. Traditional batch systems processed data in fixed intervals, often leading to delays in actionable insights. Stream processing, on the other hand, enabled real-time data handling, significantly reducing latency and improving efficiency.

A comparison of batch and stream processing architectures highlights the transformation:

| Aspect | Batch Processing Architecture | Stream Processing Architecture |
| --- | --- | --- |
| Log Upload | Logs uploaded to S3 | Logs uploaded to S3, triggering events for processing |
| Processing Frequency | Hourly processing, leading to delays of up to 60 minutes | Near real-time processing, reducing delays to a few minutes |
| Data Transfer Method | Storage Transfer Service used for hourly data transfer | Event-driven triggers for immediate processing |
| Cost Efficiency | Inefficiencies due to reprocessing of files | Costs reduced by about 10% with event-driven architecture |
| Key Improvements | Increased batch frequency could improve performance | Immediate log reflection and reduced costs through optimization |

In this case, moving to an event-driven design cut log reflection time from up to 60 minutes to about six minutes and reduced costs by roughly 10%. More broadly, stream processing has become essential for modern applications that depend on continuous data flows and immediate decision-making.
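The size of the latency gap follows from scheduling alone. The Python sketch below compares, for a few assumed upload times, how long a file waits under an hourly batch schedule versus an event-driven trigger. The six-minute processing time on the event-driven path is an assumption chosen to mirror the reported figure, not a measurement, and the function names are hypothetical.

```python
BATCH_INTERVAL_MIN = 60    # hourly batch job, as in the comparison above
EVENT_PROCESSING_MIN = 6   # assumed processing time once an upload event fires


def batch_delay(arrival_min: int) -> int:
    """Minutes from arrival until the next scheduled hourly run picks the file up."""
    next_run = (arrival_min // BATCH_INTERVAL_MIN + 1) * BATCH_INTERVAL_MIN
    return next_run - arrival_min


def event_delay(_arrival_min: int) -> int:
    """Processing starts on upload, so the delay does not depend on arrival time."""
    return EVENT_PROCESSING_MIN


arrivals = [0, 15, 42, 59, 75]  # assumed upload times, in minutes
print("batch:", [batch_delay(t) for t in arrivals])  # batch: [60, 45, 18, 1, 45]
print("event:", [event_delay(t) for t in arrivals])  # event: [6, 6, 6, 6, 6]
```

An upload that lands right after a batch run waits almost the full hour, which is where the "up to 60 minutes" comes from; the event-driven path removes that wait and leaves only processing time.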

Foundational Frameworks: Apache Storm and Samza

Apache Storm and Samza were among the first open-source frameworks to establish the foundation for stream processing. Storm, introduced in 2011, gained recognition for its ability to process over a million tuples per second per node. Its topologies are built from spouts (stream sources) and bolts (processing steps), which scale independently and linearly to meet growing data demands. Storm also keeps its cluster coordination state in ZooKeeper, so its daemons can recover from node failures without catastrophic consequences.

Key metrics of Apache Storm include:

| Metric | Description |
| --- | --- |
| Processing Speed | Processes over a million tuples per second per node. |
| Scalability | Spouts and bolts scale independently and linearly. |
| Fault Tolerance | Recovers from node failures; cluster coordination state is kept in ZooKeeper. |
| Data Processing Guarantee | At-least-once: tuples that are not fully processed, for example because a node failed or a message was lost, are replayed. |
| Exactly-Once Processing | Trident provides exactly-once processing semantics. |
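Storm's basic guarantee is at-least-once, so a tuple can be replayed after a failure and a naive counter would double-count it. Trident's exactly-once semantics rely on processing tuples in batches with transaction IDs and storing the last applied transaction ID alongside each state value. The Python sketch below mimics that idea. It is not Storm's API, and the class and method names are hypothetical.

```python
from typing import Dict, List, Tuple


class TransactionalCounter:
    """Per-key counts that ignore a replayed batch (mimics Trident transactional state).

    Assumes, as Trident transactional spouts guarantee, that a replayed batch
    carries the same transaction ID and exactly the same tuples as the original.
    """

    def __init__(self) -> None:
        # key -> (count, txid of the last batch applied to this key)
        self._state: Dict[str, Tuple[int, int]] = {}

    def apply_batch(self, txid: int, keys: List[str]) -> None:
        increments: Dict[str, int] = {}
        for key in keys:
            increments[key] = increments.get(key, 0) + 1
        for key, inc in increments.items():
            count, last_txid = self._state.get(key, (0, -1))
            if last_txid == txid:
                continue  # this batch was already applied to this key, so skip the replay
            self._state[key] = (count + inc, txid)

    def count(self, key: str) -> int:
        return self._state.get(key, (0, -1))[0]


counter = TransactionalCounter()
counter.apply_batch(1, ["a", "b", "a"])
counter.apply_batch(1, ["a", "b", "a"])  # replay after a failed acknowledgment: ignored
counter.apply_batch(2, ["a"])
print(counter.count("a"), counter.count("b"))  # 3 1
```

Storing the transaction ID next to the value is what makes the update idempotent; without it, the replayed batch would raise the count of "a" to 5.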

Apache Samza, tightly integrated with Kafka, leveraged Kafka's architecture for fault tolerance and state storage. It became a preferred choice for applications requiring robust buffering capabilities. Both frameworks demonstrated the potential of stream processing for handling real-time workloads with strict latency requirements.
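Samza keeps each task's state in a local store (RocksDB by default) and records every write to a Kafka changelog topic, so a task restarted on another machine can rebuild its state by replaying that topic. The Python sketch below models the pattern with a plain list standing in for the changelog topic and a dictionary standing in for the local store. The class and method names are hypothetical and are not Samza's API.

```python
from typing import Dict, List, Optional, Tuple

ChangelogRecord = Tuple[str, Optional[int]]  # (key, value); value None marks a deletion


class ChangelogBackedStore:
    """Local key-value store whose every write is also appended to a changelog."""

    def __init__(self, changelog: List[ChangelogRecord]) -> None:
        self.changelog = changelog          # stands in for a Kafka changelog topic
        self.local: Dict[str, int] = {}     # stands in for the local store

    def put(self, key: str, value: int) -> None:
        self.local[key] = value
        self.changelog.append((key, value))

    def delete(self, key: str) -> None:
        self.local.pop(key, None)
        self.changelog.append((key, None))  # tombstone record

    @classmethod
    def restore(cls, changelog: List[ChangelogRecord]) -> "ChangelogBackedStore":
        """Rebuild local state after a failure by replaying the changelog in order."""
        store = cls(changelog)
        for key, value in changelog:
            if value is None:
                store.local.pop(key, None)
            else:
                store.local[key] = value
        return store


log: List[ChangelogRecord] = []
store = ChangelogBackedStore(log)
store.put("user-1", 3)
store.put("user-2", 5)
store.put("user-1", 4)
store.delete("user-2")

recovered = ChangelogBackedStore.restore(log)  # e.g. after the task moves to another machine
print(recovered.local)  # {'user-1': 4}
```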

Apache Kafka and the Revolution in Data Streaming

Apache Kafka revolutionized stream processing by introducing a distributed event streaming platform capable of handling massive data volumes. Its versatility made it a cornerstone for industries like retail, finance, and logistics. For instance, Migros utilized Kafka to personalize customer journeys and optimize stock levels. Erste Group employed Kafka to build fraud detection systems, enhancing customer trust. Schiphol Airport integrated Kafka to streamline passenger flow and improve travel experiences.

| Industry | Company | Use of Kafka |
| --- | --- | --- |
| Retail | Migros | Personalizes customer journeys and optimizes stock levels for timely product delivery. |
| Financial Services | Erste Group | Builds robust fraud detection systems, enhancing customer trust and reducing financial risks. |
| Travel & Logistics | Schiphol Airport | Integrates data from various systems to optimize passenger flow and improve travel experiences. |

Kafka's ability to process unbounded data streams in real time has made it indispensable for modern data-driven applications. Its adoption continues to grow as organizations seek scalable and reliable solutions for stream processing.
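Kafka's core abstraction is a topic split into partitions, each an append-only log whose records are identified by offsets. Records with the same key land in the same partition, and each consumer tracks its own position, so data can be re-read. The in-memory Python sketch below models only that idea. Kafka's default partitioner hashes keys with murmur2, so the CRC32 hash used here, like the class names, is an assumption for illustration.

```python
import zlib
from typing import List, Tuple

Record = Tuple[str, str]  # (key, value)


class PartitionedLog:
    """In-memory stand-in for a topic: each partition is an append-only list of records."""

    def __init__(self, num_partitions: int) -> None:
        self.partitions: List[List[Record]] = [[] for _ in range(num_partitions)]

    def produce(self, key: str, value: str) -> Tuple[int, int]:
        # Same key -> same partition, which preserves per-key ordering.
        # Assumption: CRC32 instead of the murmur2 hash Kafka's default partitioner uses.
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[partition].append((key, value))
        return partition, len(self.partitions[partition]) - 1  # (partition, offset)

    def read(self, partition: int, offset: int, max_records: int = 100) -> List[Record]:
        # Reading does not remove records, so any consumer can re-read from an earlier offset.
        return self.partitions[partition][offset:offset + max_records]


class Consumer:
    """Tracks its own position (offset) in one partition, loosely like a Kafka consumer."""

    def __init__(self, log: PartitionedLog, partition: int) -> None:
        self.log, self.partition, self.position = log, partition, 0

    def poll(self) -> List[Record]:
        records = self.log.read(self.partition, self.position)
        self.position += len(records)
        return records


log = PartitionedLog(num_partitions=3)
placements = [log.produce("order-42", event) for event in ("created", "paid", "shipped")]
partition = placements[0][0]
consumer = Consumer(log, partition)
print([value for _, value in consumer.poll()])  # ['created', 'paid', 'shipped']
```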

Modern Advancements in Stream Processing

Distributed Computing and Scalability

Distributed computing has become a cornerstone of modern stream processing frameworks, enabling systems to handle vast amounts of data with improved efficiency and reliability. By distributing workloads across multiple nodes, these frameworks achieve higher scalability and fault tolerance, addressing the limitations of single-node architectures.

One reported comparison of single-node and distributed architectures illustrates the advantages of distributed computing:

| Metric | Single-Node Architecture | Distributed Architecture |
| --- | --- | --- |
| CPU Utilization | 95% | 70% |
| Memory Consumption | 7 GB | 3.5 GB |
| Processing Time Improvement | N/A | 75% decrease |
| Accuracy Improvement | N/A | 3.5% |

Distributed systems also incorporate advanced mechanisms to enhance performance. For instance:

Checkpointing mechanisms ensure fault tolerance by saving the system's state periodically, allowing recovery in case of failures.

Sample-based repartitioning addresses load imbalance, optimizing throughput and resource utilization.

Scalable communication libraries, such as Gloo and UCX/UCC, can outperform traditional options like Open MPI under high parallelism, helping maintain consistent performance.
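Sample-based repartitioning can be sketched as follows: draw a sample of keys, sort it, and take evenly spaced quantiles as split points, so each downstream partition receives roughly the same number of records even when keys are skewed. This is the general range-partitioning idea (Spark's `RangePartitioner`, for example, samples keys to choose its ranges). The function names, sample size, and seed below are assumptions.

```python
import bisect
import random
from typing import List, Sequence


def compute_split_points(keys: Sequence[int], num_partitions: int,
                         sample_size: int = 1000, seed: int = 0) -> List[int]:
    """Pick num_partitions - 1 boundaries at evenly spaced quantiles of a key sample."""
    rng = random.Random(seed)
    sample = sorted(rng.sample(keys, min(sample_size, len(keys))))
    return [sample[len(sample) * i // num_partitions] for i in range(1, num_partitions)]


def assign_partition(key: int, split_points: List[int]) -> int:
    """Partition p receives keys in [split_points[p-1], split_points[p])."""
    return bisect.bisect_right(split_points, key)


# Skewed keys: half of them fall in the lowest quarter of the value range,
# so equal-width ranges would overload the first partition.
keys = [i * i for i in range(10_000)]
splits = compute_split_points(keys, num_partitions=4, sample_size=2000)
sizes = [0] * 4
for k in keys:
    sizes[assign_partition(k, splits)] += 1
print(sizes)
```

Because the split points come from the sample's quantiles rather than the key range, the partition sizes come out close to 2,500 each; a larger sample tightens the balance at the cost of more sampling work.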

These advancements have made distributed computing indispensable for stream processing, enabling organizations to process unbounded data streams efficiently and reliably.

Integration with Machine Learning and AI

The integration of machine learning (ML) and artificial intelligence (AI) with stream processing has unlocked new possibilities for real-time analytics and decision-making. By combining the strengths of these technologies, organizations can derive actionable insights from data streams as they occur.

Stream processing frameworks now support real-time model training and inference, allowing businesses to adapt to changing conditions dynamically. For example:

In eCommerce, ML models analyze customer behavior in real time to deliver personalized recommendations.

In cybersecurity, AI-powered anomaly detection systems identify potential threats as they emerge, minimizing response times.

Apache Flink (through its Flink ML library) and TensorFlow Extended (TFX, an end-to-end ML pipeline platform whose data processing runs on Apache Beam) have helped connect stream and data processing with ML pipelines by providing APIs and libraries for both. These tools support deploying stateful models that are updated continuously from incoming data.
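A model that learns from every incoming event can be as simple as a running statistic. The Python sketch below keeps an online mean and variance with Welford's algorithm and flags values more than three standard deviations from the mean, scoring each value before folding it into the model. The threshold, the warm-up length, and the class name are assumptions. This is a deliberately simple stand-in, not the API of Flink ML or TFX.

```python
import math


class StreamingZScoreDetector:
    """Online anomaly detector: Welford running mean/variance plus a z-score threshold."""

    def __init__(self, threshold: float = 3.0, warmup: int = 10) -> None:
        self.threshold = threshold  # assumed: flag values beyond 3 standard deviations
        self.warmup = warmup        # assumed: do not score until 10 values have been seen
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0               # running sum of squared deviations from the mean

    def update(self, x: float) -> bool:
        """Score x against the current model, then fold x into the model."""
        is_anomaly = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            is_anomaly = std > 0 and abs(x - self.mean) / std > self.threshold
        # Welford's update of the running mean and sum of squared deviations
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return is_anomaly


detector = StreamingZScoreDetector()
readings = [10, 11, 9, 10, 12, 10, 9, 11, 10, 10, 11, 50, 10]
flags = [detector.update(r) for r in readings]
print([r for r, flagged in zip(readings, flags) if flagged])  # [50]
```

Here anomalies are also absorbed into the model, so one outlier widens the tolerance for later values; a production detector might exclude flagged values or use a decaying window instead.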

The synergy between stream processing and AI has transformed industries by enabling predictive analytics, automation, and enhanced decision-making capabilities.

Cloud-Native Stream Processing Solutions

Cloud-native solutions have revolutionized stream processing by offering scalability, flexibility, and cost efficiency. These platforms leverage the power of cloud computing to handle massive data volumes while reducing infrastructure management overhead.

Research on cloud data platforms points to both the benefits and the practical challenges of cloud-native deployments:

| Study | Findings |
| --- | --- |
| Abadi et al. | Cloud-based data warehouses support massive data volumes and complex queries, enabling descriptive analytics at scale. |
| Baldini et al. | Serverless computing enables on-demand data processing, reducing costs and improving resource utilization. |
| Pearson | Robust data governance frameworks ensure data privacy and regulatory compliance in cloud environments. |
| RightScale | Highlights the need for employee training to address the skills gap in cloud technologies. |

Cloud-native platforms, such as Amazon Kinesis and Google Cloud Dataflow, provide features like auto-scaling, serverless architecture, and integration with other cloud services. These capabilities allow organizations to focus on deriving insights rather than managing infrastructure.
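Managed services implement auto-scaling internally, and their actual policies differ. As a rough illustration of the idea, the hypothetical rule below sizes a worker pool from the observed input rate and backlog, assuming a known per-worker throughput and clamping the result to configured bounds. None of these names correspond to Kinesis or Dataflow APIs.

```python
import math


def desired_workers(input_rate: float, backlog: float, per_worker_rate: float,
                    drain_seconds: float = 60.0, min_workers: int = 1,
                    max_workers: int = 50) -> int:
    """Hypothetical scaling rule: keep up with input and drain the backlog in drain_seconds.

    input_rate and per_worker_rate are in records per second; backlog is a record count.
    """
    required_rate = input_rate + backlog / drain_seconds
    workers = math.ceil(required_rate / per_worker_rate)
    return max(min_workers, min(max_workers, workers))


print(desired_workers(input_rate=9_000, backlog=120_000, per_worker_rate=1_000))  # 11
```

Real autoscalers usually add smoothing or cooldown periods so the pool does not oscillate between sizes; that is omitted here.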

The adoption of cloud-native stream processing solutions continues to grow as businesses seek to leverage the scalability and flexibility of the cloud for real-time analytics.

Challenges in Stream Processing Implementation

Managing Latency and Fault Tolerance

Latency and fault tolerance are critical challenges in stream processing. Systems must process vast amounts of data in real time while continuing to operate through failures. Operator pipelining, which lets successive operators process data concurrently, has emerged as a way to reduce latency. Achieving fault tolerance, however, remains complex. Apache Flink periodically checkpoints operator state, and Kafka Streams backs up its local state to changelog topics; both approaches enable recovery after failures. Despite these advances, recovery speed and the performance impact of failures remain open challenges.
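The checkpoint-and-replay pattern can be sketched in a few lines: an operator periodically snapshots its state together with the source offset it has consumed up to, and after a crash it restores the last snapshot and re-reads the source from that offset. Flink's actual mechanism (asynchronous barrier snapshots coordinated across many operators) is considerably more involved. This single-operator Python version, with hypothetical names and an assumed checkpoint interval, shows only the core idea.

```python
import copy
from typing import Dict, List, Optional, Tuple

CHECKPOINT_EVERY = 3  # assumed checkpoint interval, in records


class RunningSumOperator:
    """Single stateful operator with periodic checkpoints of (state, source offset)."""

    def __init__(self) -> None:
        self.state: Dict[str, int] = {}  # in-memory state, lost on a crash
        self.offset = 0                  # next position to read from the replayable source
        # Last snapshot; kept in memory here, whereas a real system writes it to durable storage.
        self.checkpoint: Tuple[Dict[str, int], int] = ({}, 0)

    def run(self, source: List[Tuple[str, int]], fail_at: Optional[int] = None) -> None:
        while self.offset < len(source):
            if self.offset == fail_at:
                raise RuntimeError(f"simulated crash at offset {self.offset}")
            key, value = source[self.offset]
            self.state[key] = self.state.get(key, 0) + value
            self.offset += 1
            if self.offset % CHECKPOINT_EVERY == 0:
                self.checkpoint = (copy.deepcopy(self.state), self.offset)

    def recover(self) -> None:
        """Roll back to the last checkpoint; later records will be re-read from the source."""
        saved_state, saved_offset = self.checkpoint
        self.state = copy.deepcopy(saved_state)
        self.offset = saved_offset


source = [("a", 1), ("b", 2), ("a", 3), ("b", 4), ("a", 5)]
op = RunningSumOperator()
try:
    op.run(source, fail_at=4)
except RuntimeError:
    op.recover()  # restores the offset-3 snapshot; the uncheckpointed ("b", 4) update is discarded
op.run(source)
print(op.state)  # {'a': 9, 'b': 6}
```

Because the rolled-back update is replayed exactly once from the source, the final sums match a failure-free run; the trade-off is that a longer checkpoint interval means more records to replay after a crash.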

A study by Vogel et al. (2023) highlights the importance of balancing throughput, latency, and fault tolerance in production systems. For example, Spark and Flink provide high-level abstractions to build scalable applications, but fault recovery often affects performance. Organizations must carefully evaluate these trade-offs to optimize their stream processing frameworks.

| Aspect | Details |
| --- | --- |
| Study Focus | Fault recovery and performance benchmarks in stream processing frameworks. |
| Key Frameworks | Spark, Flink, and Kafka Streams support high-level abstractions for building scalable applications. |
| Performance Metrics | Throughput, latency, and fault tolerance are critical for production systems. |
| Challenges Identified | Fault tolerance remains challenging; recovery speed and the performance impact of failures are open questions. |

Balancing Costs with Performance

Cost efficiency is another significant challenge in stream processing. Organizations often struggle to balance the expenses of on-premises and cloud resources while maintaining performance. Reports indicate that Redpanda can offer up to 60% cost savings compared with Confluent in fan-in scenarios, which makes it attractive to cost-conscious businesses. Cloud provider discounts and reserved instances can reduce operational costs further.

The global data processing market, valued at $32.4 billion in 2022, is projected to grow at a rate of 15.7% annually until 2030. This growth underscores the increasing demand for efficient and cost-effective stream processing solutions. A survey by Deloitte found that 67% of financial institutions adopting automated data processing systems achieved significant cost reductions. These insights emphasize the importance of selecting the right tools and strategies to balance costs with performance.
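For a sense of scale, compounding the cited figures forward is simple arithmetic. Assuming eight full years of growth from 2022 to 2030 at a constant 15.7% per year, the projection implies a market of roughly $104 billion by 2030. The snippet below only performs that calculation and adds no data beyond the two cited numbers.

```python
BASE_YEAR = 2022
BASE_SIZE_BILLION_USD = 32.4   # cited 2022 market size
ANNUAL_GROWTH = 0.157          # cited annual growth rate


def projected_size(year: int) -> float:
    """Compound the base value at a constant annual rate (an assumption of this sketch)."""
    return BASE_SIZE_BILLION_USD * (1 + ANNUAL_GROWTH) ** (year - BASE_YEAR)


print(f"2030: ${projected_size(2030):.0f} billion")  # 2030: $104 billion
```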

Addressing Data Security and Compliance

Data security and compliance are paramount in stream processing, especially when handling sensitive information. Organizations must implement robust measures to protect data from unauthorized access and ensure compliance with regulations like GDPR. Techniques such as data encryption and masking safeguard sensitive information during processing. Continuous monitoring further enhances security by detecting and responding to threats in real time.
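As an illustration of masking and pseudonymization applied in-stream, the Python sketch below replaces an email address with a keyed hash (HMAC-SHA-256), so records can still be joined on the resulting token, and keeps only the last four digits of a card number. The field names `email` and `card_number` and the key handling are assumptions. Note that under GDPR, pseudonymized data generally still counts as personal data, so a transformation like this is one control among several.

```python
import hashlib
import hmac
from typing import Any, Dict

# Assumption: in practice the key comes from a secrets manager and is rotated.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value: str) -> str:
    """Keyed hash: stable token for joins, which cannot be computed without the key."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_card(number: str) -> str:
    """Keep only the last four digits of a card number."""
    digits = [ch for ch in number if ch.isdigit()]
    if len(digits) <= 4:
        return "*" * len(digits)
    return "*" * (len(digits) - 4) + "".join(digits[-4:])


def protect(record: Dict[str, Any]) -> Dict[str, Any]:
    """Return a copy of the record with the (assumed) sensitive fields transformed."""
    out = dict(record)
    if "email" in out:
        out["email"] = pseudonymize(out["email"])
    if "card_number" in out:
        out["card_number"] = mask_card(out["card_number"])
    return out


event = {"email": "jane@example.com", "card_number": "4111 1111 1111 1234", "amount": 42}
print(protect(event)["card_number"])  # ************1234
```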

Companies like Babylon Health and Bayer have integrated stream processing into their operations while maintaining compliance:

Babylon Health: Ensures compliance with PII and GDPR regulations in telemedicine operations.

Bayer: Analyzes clinical trial data securely in real time, enhancing compliance and data protection.

These examples show that stream processing can be both secure and compliant when implemented with the right strategies.

Future Trends in Stream Processing

The Role of Edge Computing

Edge computing is reshaping the landscape of stream processing by bringing computation closer to data sources. This approach reduces latency and enhances efficiency, making it ideal for applications requiring immediate responses. For instance, autonomous vehicles rely on edge computing to process sensor data in real time, ensuring safe navigation. Similarly, industrial IoT systems use edge devices to monitor equipment and predict failures before they occur.

By decentralizing data processing, edge computing minimizes the need for constant communication with centralized servers. This not only reduces bandwidth usage but also enhances data security by keeping sensitive information local. As industries adopt edge computing, stream processing frameworks are evolving to support distributed architectures, enabling seamless integration with edge devices.
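One common way edge nodes cut bandwidth is to aggregate readings locally and forward only periodic summaries, while still forwarding urgent readings immediately. The Python sketch below assumes a fixed number of readings per summary and an alert threshold, both hypothetical; the `send` callback stands in for whatever uplink the device actually uses.

```python
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]


class EdgeAggregator:
    """Hypothetical edge-side aggregator: summaries for routine data, immediate alerts for outliers."""

    def __init__(self, send: Callable[[Message], None], batch_size: int = 60,
                 alert_threshold: float = 90.0) -> None:
        self.send = send                        # stands in for the uplink to a central system
        self.batch_size = batch_size            # assumed readings per summary
        self.alert_threshold = alert_threshold  # assumed alert level
        self.buffer: List[float] = []

    def on_reading(self, value: float) -> None:
        if value > self.alert_threshold:
            self.send({"type": "alert", "value": value})  # urgent: forward right away
        self.buffer.append(value)
        if len(self.buffer) == self.batch_size:
            self.send({
                "type": "summary",
                "count": len(self.buffer),
                "min": min(self.buffer),
                "max": max(self.buffer),
                "mean": sum(self.buffer) / len(self.buffer),
            })
            self.buffer.clear()


uplink: List[Message] = []
agg = EdgeAggregator(uplink.append, batch_size=4)
for reading in [70, 72, 95, 71, 69, 70]:
    agg.on_reading(reading)
print(len(uplink))  # 2
```

Six readings produce two uplink messages (one alert and one summary), and the last two readings wait on the device for the next summary.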

AI-Driven Automation in Stream Processing

Artificial intelligence is driving automation in stream processing, enabling systems to analyze and act on data without human intervention. AI-powered algorithms can detect patterns, predict outcomes, and trigger automated responses in real time. For example, eCommerce platforms use AI to analyze customer behavior and deliver personalized recommendations instantly. In cybersecurity, AI identifies anomalies in network traffic, preventing potential breaches.

Stream processing frameworks now incorporate machine learning libraries, allowing businesses to deploy AI models directly within data pipelines. This integration accelerates decision-making and enhances operational efficiency. As AI continues to advance, its role in stream processing will expand, unlocking new possibilities for automation and innovation.

Predictions for Industry Transformation

Stream processing innovations are set to transform industries by enabling real-time data-driven decision-making. Emerging technologies allow businesses to process data instantly, adapting to market changes with agility. Predictive analytics empowers companies to forecast trends, optimizing strategies and inventory management. AI-driven analytics provide personalized customer insights, enhancing engagement and driving revenue.

Industries such as healthcare, finance, and retail will experience significant shifts. Real-time patient monitoring will improve healthcare outcomes, while financial institutions will enhance fraud detection systems. Retailers will leverage personalized insights to refine marketing strategies and boost customer satisfaction. These advancements highlight the transformative potential of stream processing across diverse sectors.

Stream processing frameworks have transformed data handling, evolving from early systems like Apache S4 to modern platforms such as Apache Kafka and Flink. These frameworks now deliver low latency, fault tolerance, scalability, and exactly-once processing, enabling industries to harness real-time insights. Applications span diverse sectors, including finance, healthcare, and telecommunications, showcasing their versatility.

| Aspect | Details |
| --- | --- |
| Emergence of Real-time Processing | The early 2010s saw the rise of systems like Apache S4 and Storm, introducing distributed stream processing. |
| Modern Frameworks | Key frameworks include Apache Kafka, Apache Flink, Apache Spark Streaming, and Amazon Kinesis. |
| Key Features | Low latency, fault tolerance, scalability, and exactly-once processing are critical features. |
| Use Cases | Applications span finance, e-commerce, healthcare, and telecommunications. |
| Future Trends | Serverless architectures, edge computing, and advanced analytics are shaping the future. |

The future of stream processing holds immense promise. Innovations like serverless architectures, edge computing, and advanced analytics will redefine real-time data applications, empowering industries to operate at unprecedented scales and speeds.

FAQ

What is the difference between batch processing and stream processing?

Batch processing handles data in chunks at scheduled intervals, while stream processing analyzes data continuously as it arrives. Stream processing offers lower latency, making it ideal for real-time applications like fraud detection or personalized recommendations.

Which industries benefit the most from stream processing?

Industries like finance, healthcare, eCommerce, and manufacturing benefit significantly. For example, financial institutions use it for fraud detection, while healthcare systems rely on it for real-time patient monitoring. Its versatility makes it valuable across various sectors.

How does stream processing ensure fault tolerance?

Stream processing frameworks use techniques like checkpointing and state replication. These methods save system states periodically, allowing recovery after failures. For instance, Apache Flink and Kafka Streams incorporate these mechanisms to maintain uninterrupted operations.

Can stream processing handle unbounded data streams?

Yes. Modern frameworks like Apache Kafka and Flink are designed to process unbounded data streams. They scale horizontally by spreading partitions and parallel tasks across machines, so continuous data flows can be handled efficiently as volumes grow.

What role does AI play in stream processing?

AI enhances stream processing by enabling real-time analytics and automation. It powers applications like anomaly detection in cybersecurity and personalized recommendations in eCommerce. Frameworks now integrate machine learning libraries to support dynamic model training and inference.