Changes You Should Know in the Data Streaming Space: Takeaways From Kafka Summit 2024

The Kafka Summit, hosted by Confluent, is the preeminent conference in the data streaming sector. We compare this year's event with Current 2023 (previously known as Kafka Summit Americas) to identify the discernible shift in Confluent's strategic vision.

Data streaming is a vibrant and rapidly evolving field, underscored by Confluent's IPO in 2021. Confluent was founded by the original creators of Apache Kafka, and its public offering has drawn even more attention to this domain. Startups like RisingWave, Redpanda, Materialize, Decodable, and WarpStream have secured significant venture capital to develop next-generation streaming platforms. Meanwhile, established companies are also making notable advances. For instance:

- Databricks has introduced the ambitious Project Lightspeed, aiming to revolutionize Spark Structured Streaming and to power Delta Live Tables within its lakehouse platform.

- Snowflake has debuted Dynamic Tables and Snowpipe Streaming, efforts to seamlessly integrate batch and streaming pipelines.

- MongoDB Atlas has entered the stream processing arena with Atlas Stream Processing, now in public preview.

The Kafka Summit, hosted by Confluent, is the preeminent conference in the data streaming sector, attracting a global community of enthusiasts and professionals. Having attended every Kafka Summit post-COVID, we've witnessed first-hand the ongoing evolution in this space. The 2024 Kafka Summit in London maintained its reputation as a magnet for data streaming aficionados worldwide. Confluent’s recap of the event highlighted several innovations, including the general availability (GA) of its managed Flink service, the newly announced Tableflow, Kora, and more.

But which topics should you pay special attention to? We compare this year's event with Current 2023 (previously known as Kafka Summit Americas) to identify the discernible shift in Confluent's strategic vision.

The Universal Data Product: A New Paradigm

The concept of a data product represents a groundbreaking shift in the data landscape. It enables users to develop applications seamlessly atop a robust data foundation, eliminating concerns about the intricate data infrastructure beneath. Traditionally, data systems like Spark and Snowflake, managed by data infrastructure teams, have served as the backbone for analytical processes. These teams create layers of abstraction, allowing data service colleagues to utilize APIs without needing to navigate the complexities of the underlying infrastructure.

Confluent aims to redefine the essence of the data product, choosing Kafka as its foundation rather than relying on batch systems such as Spark and Snowflake. This strategic shift is designed to simplify user interaction with data infrastructure, promoting Kafka from a mere tool for data movement to a comprehensive solution for data management and storage.

Data, with its inherent value, acts as a magnet, attracting more interactions and, consequently, generating increased revenue. But how does Confluent set itself apart from competitors like Snowflake and Databricks? Shaun Clowes, the Chief Product Officer at Confluent, sheds light on this distinction. While analytical data products have matured, they often overlook the dynamism of real-time data events. Confluent's vision extends beyond the analytical domain to encompass the operational sphere, capturing data at its source. This approach not only preserves the immediacy of operational data but also integrates it seamlessly into analytical processes.

Should Confluent realize this ambitious vision, it stands to redefine its market position significantly, potentially surpassing industry giants like Snowflake and Databricks in both value and influence.

Slide deck from Current 2023: Confluent articulated their ambition to develop data products leveraging Kafka as the foundational technology.

Slide deck from Kafka Summit London 2024: Instead of building yet another analytical data product, Confluent wants to create a universal data product.

Kafka and Kora: Pioneering Data Streaming Innovation

At its core, Confluent is synonymous with Kafka. Its primary business revolves around enhancing and promoting Kafka, a technology deemed indispensable by virtually every modern company. The allure of open-source Kafka lies not only in its functionality but also in its critical role across various industries. Recent developments have introduced notable enhancements, including support for two-phase commit (KIP-939) and improved client observability (KIP-714). Two-phase commit in particular promises to change how data consistency is maintained in Kafka-powered systems, because it lets Kafka topics and external databases take part in the same atomic transaction. That directly addresses the "dual write" problem, in which an application writes to a database and to Kafka separately and a failure between the two writes leaves them inconsistent. Closing this gap extends exactly-once semantics (EOS) beyond Kafka itself, a significant milestone for data integrity in distributed environments.
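To make the two-phase commit idea concrete, here is a toy, in-memory model of the protocol itself in Python. It is a sketch under simplifying assumptions, not the Kafka client API: `Participant` and `two_phase_commit` are hypothetical names, the "database" and "topic" are plain lists, and the coordinator keeps no durable log. It shows the property KIP-939 is after: either every participant commits the write, or none does.

```python
from dataclasses import dataclass, field


# eq=False keeps identity-based hashing so participants can be dict keys.
@dataclass(eq=False)
class Participant:
    """Toy transactional resource, e.g. a database table or a Kafka topic (hypothetical)."""
    name: str
    fail_on_prepare: bool = False  # simulate a participant that votes "no"
    committed: list = field(default_factory=list)
    staged: list = field(default_factory=list)

    def prepare(self, records):
        # Phase 1: stage the writes and vote; nothing is visible to readers yet.
        if self.fail_on_prepare:
            return False
        self.staged = list(records)
        return True

    def commit(self):
        # Phase 2 (success): make the staged writes visible.
        self.committed.extend(self.staged)
        self.staged = []

    def abort(self):
        # Phase 2 (failure): discard the staged writes.
        self.staged = []


def two_phase_commit(writes):
    """Atomically apply `writes` (a dict of Participant -> records).

    Returns True if every participant committed, False if the transaction aborted.
    """
    prepared = []
    for participant, records in writes.items():
        if not participant.prepare(records):
            # A single "no" vote aborts everyone who has already prepared.
            for p in prepared:
                p.abort()
            return False
        prepared.append(participant)
    # Every participant voted "yes", so the coordinator tells all of them to commit.
    for participant in prepared:
        participant.commit()
    return True


# The dual-write scenario: one order must land in the database and in the topic.
db = Participant("orders_db")
topic = Participant("orders_topic", fail_on_prepare=True)
ok = two_phase_commit({db: ["order-42"], topic: ["order-42-created"]})
print(ok, db.committed, topic.committed)  # False [] []
```

Because the topic refuses to prepare, the database write is rolled back as well, which is exactly the inconsistency a naive dual write cannot prevent. A production coordinator must also persist its decision and recover in-doubt transactions after a crash; the toy skips all of that.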

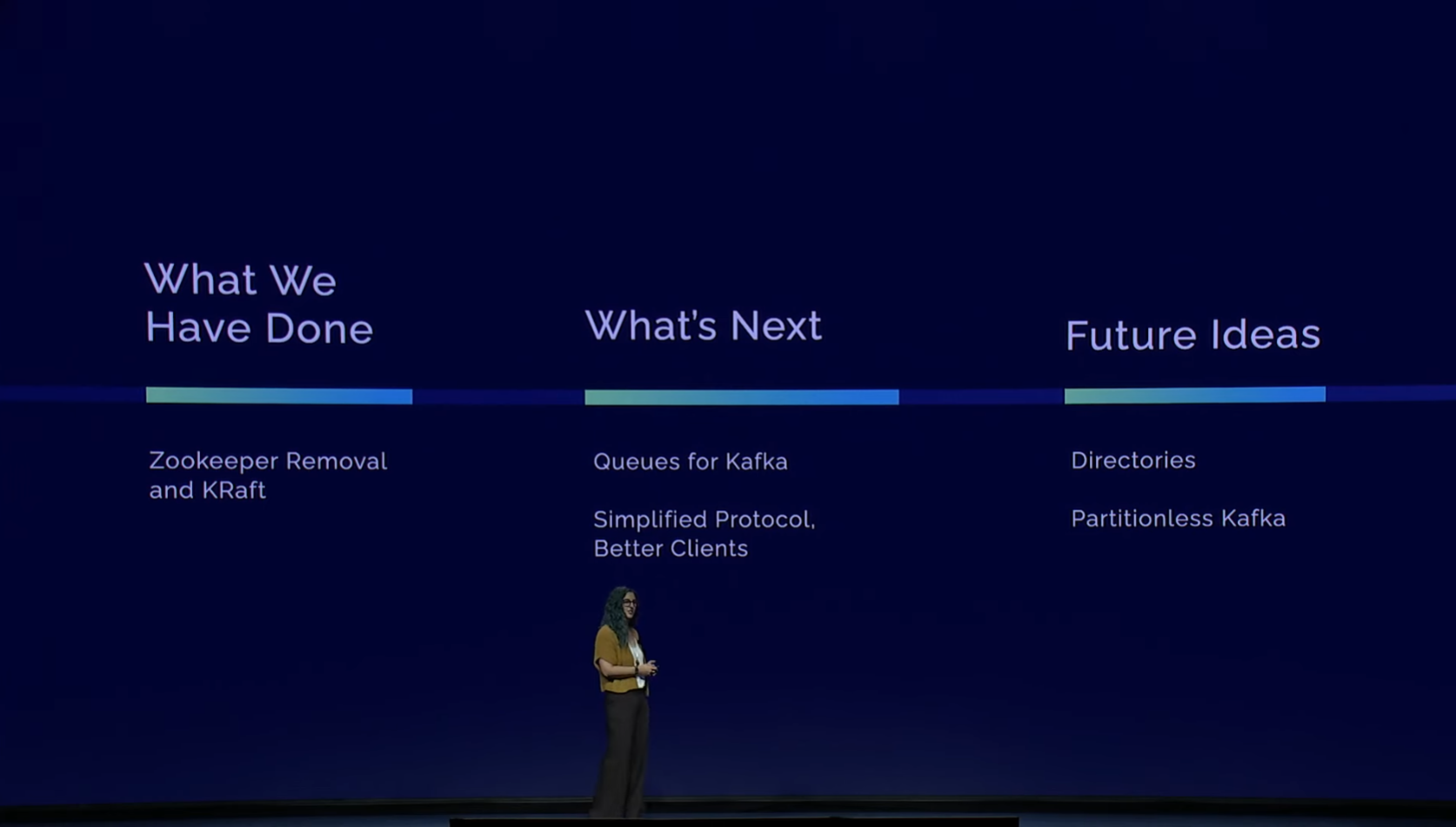

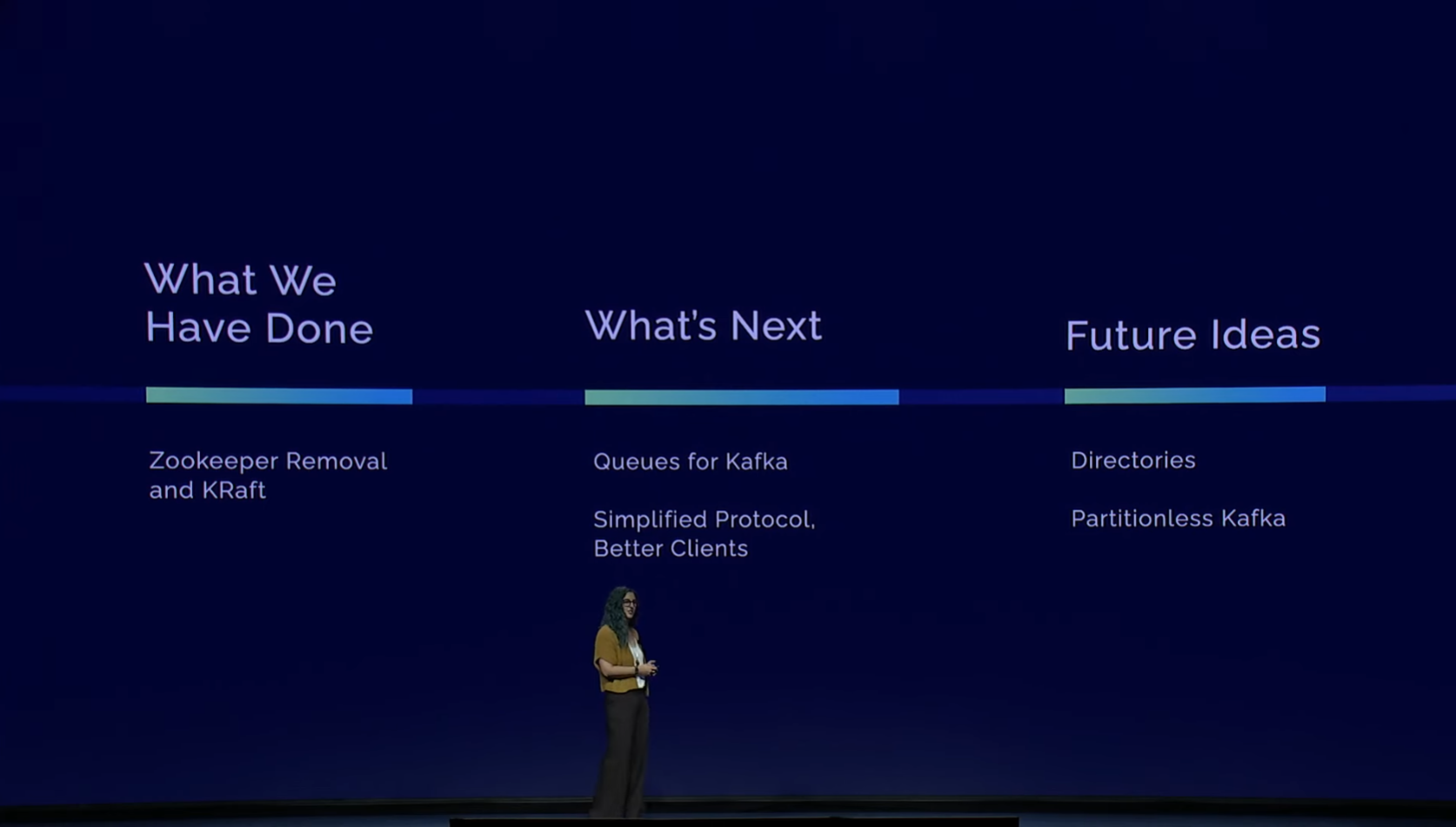

Slide deck from Current 2023: Kafka’s roadmap.

Slide deck from Kafka Summit London 2024: Two-Phase Commit and Client Observability are the two newly added features.

As Kafka's market expands, competition intensifies, with new entrants aiming to carve out their share. A recurring challenge for users has been Kafka's cost, and several solutions have emerged to reduce it. Redpanda, for example, uses tiered storage to cut cloud expenses significantly. WarpStream builds a Kafka-compatible platform directly on cloud object stores like S3, while Upstash offers a serverless Kafka model that improves accessibility and cost efficiency.
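Tiered storage saves money because only the most recent log segments need to sit on expensive broker disks; older, closed segments move to object storage while remaining readable through the same log. The Python sketch below illustrates that idea under stated assumptions: `TieredLog`, `Segment`, and the count-based retention policy are hypothetical and do not mirror Redpanda's or Apache Kafka's implementation, which typically use size- or time-based retention and real object-store clients.

```python
from dataclasses import dataclass, field


@dataclass
class Segment:
    base_offset: int  # offset of the first record in this segment
    records: list = field(default_factory=list)


@dataclass
class TieredLog:
    """Toy partition log: recent segments on local disk, older ones in object storage."""
    segment_size: int = 3    # records per segment (tiny, for illustration)
    local_segments: int = 2  # hypothetical policy: keep the newest N segments local (N >= 1)
    local: list = field(default_factory=list)
    remote: list = field(default_factory=list)  # stands in for a bucket in S3 or similar
    next_offset: int = 0

    def append(self, record):
        # Roll a new segment when the active one is full.
        if not self.local or len(self.local[-1].records) == self.segment_size:
            self.local.append(Segment(self.next_offset))
            self._offload()
        self.local[-1].records.append(record)
        self.next_offset += 1
        return self.next_offset - 1

    def _offload(self):
        # Move the oldest closed segments to cheap remote storage.
        while len(self.local) > self.local_segments:
            self.remote.append(self.local.pop(0))

    def read(self, offset):
        # Consumers see a single log; which tier holds a segment is transparent.
        for segment in self.remote + self.local:
            if segment.base_offset <= offset < segment.base_offset + len(segment.records):
                return segment.records[offset - segment.base_offset]
        raise KeyError(offset)


log = TieredLog()
for i in range(8):
    log.append(f"event-{i}")
print([s.base_offset for s in log.remote], [s.base_offset for s in log.local])  # [0] [3, 6]
print(log.read(1))  # event-1 (served from the remote tier)
```

The design choice worth noticing is that offloading is invisible to readers: `read` searches both tiers, so cost drops without changing how consumers address offsets.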

In response to these market dynamics, Confluent has unveiled the Kora engine that powers Confluent Cloud. Kora is designed to reinforce Confluent’s leadership through multi-tenancy, a serverless abstraction, separate networking, storage, and compute layers, automated operations, and universal access. According to Confluent, Kora is up to 16 times faster than open-source Kafka while also being more cost-effective, addressing one of the most pressing concerns of Kafka users.

Slide deck from Current 2023: Kora is 10X faster than open-source Kafka.

Slide deck from Kafka Summit London 2024: Kora is now 16X faster than open-source Kafka.

Blending Batch and Streaming: A Unified Approach

The perception of streaming as a niche often emerges when comparing data streaming companies with their batch processing counterparts. While it's debatable whether this viewpoint holds true in 2024, the necessity for batch processing solutions remains undisputed, and their relevance is unlikely to wane even as the streaming market expands.

Confluent's strategy transcends the traditional boundaries of the streaming domain, aiming to merge the realms of batch and streaming. This fusion is intended to capture market share across both operational and analytical segments. But what's the method behind this visionary approach?

A significant shift was observed at Current 2023, where Confluent heavily emphasized Flink's dual capabilities in batch and streaming, its potential as a streaming warehouse, and its compatibility with data lake technologies like Iceberg, Paimon, and Hudi. Interestingly, Delta Lake was not mentioned. Fast forward to this year, Confluent has pivoted its focus towards Iceberg, championing it as the cornerstone of its data lake strategy, backed by heavyweight supporters like AWS and Snowflake.
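The promise of a unified batch and streaming engine is that one logical query gives the same answer whether it runs once over a bounded dataset or continuously over an unbounded stream. The plain-Python sketch below illustrates that property with a per-key running total. It is an analogy rather than Flink code, and `batch_totals` and `streaming_totals` are hypothetical names.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Tuple

Event = Tuple[str, int]  # (key, amount)


def batch_totals(events: Iterable[Event]) -> Dict[str, int]:
    """Batch mode: the input is bounded, so emit one final result at the end."""
    totals: Dict[str, int] = defaultdict(int)
    for key, amount in events:
        totals[key] += amount
    return dict(totals)


def streaming_totals(events: Iterable[Event]) -> Iterator[Tuple[str, int]]:
    """Streaming mode: the input may never end, so emit an updated result per event."""
    totals: Dict[str, int] = defaultdict(int)
    for key, amount in events:
        totals[key] += amount
        yield key, totals[key]  # changelog-style update for this key


events = [("alice", 5), ("bob", 3), ("alice", 2)]
updates = list(streaming_totals(events))
print(updates)               # [('alice', 5), ('bob', 3), ('alice', 7)]
print(dict(updates))         # {'alice': 7, 'bob': 3}  (latest update per key)
print(batch_totals(events))  # {'alice': 7, 'bob': 3}
```

Keeping the latest streaming update per key converges to the batch answer. In Flink itself, this choice is made through the runtime execution mode (batch or streaming) rather than by writing two separate programs.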

This move towards Iceberg is not merely an integration but a profound transformation in Confluent's strategy. The introduction of Tableflow marks a pivotal advancement: it makes Kafka data available to data lakes, warehouses, and analytics engines as Apache Iceberg tables. Traditionally, bridging operational data with analytical insights has been complex, costly, and fragile. Tableflow aims to streamline this integration, promising a more cohesive data ecosystem.
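The article does not describe how Tableflow works internally, so the sketch below models only the general pattern of presenting a topic as a snapshot-based table, which is how Iceberg tables are versioned: each commit produces a new snapshot, readers query one consistent snapshot, and the sink records the last committed offset so that a restart does not duplicate rows. `ToyTable`, `Snapshot`, and `materialize` are hypothetical and are not the Iceberg or Tableflow APIs.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Snapshot:
    snapshot_id: int
    data_files: Tuple[Tuple[str, ...], ...]  # each "file" is an immutable batch of rows
    last_offset: int  # highest topic offset covered by this snapshot


@dataclass
class ToyTable:
    """Append-only table whose state only changes through snapshot commits."""
    snapshots: List[Snapshot] = field(default_factory=list)

    def current(self) -> Snapshot:
        return self.snapshots[-1] if self.snapshots else Snapshot(0, (), -1)

    def commit(self, rows: List[str], last_offset: int) -> Snapshot:
        base = self.current()
        # New snapshot = previous files + one new file; readers see all of it or none.
        snap = Snapshot(base.snapshot_id + 1, base.data_files + (tuple(rows),), last_offset)
        self.snapshots.append(snap)
        return snap

    def scan(self, snapshot: Optional[Snapshot] = None) -> List[str]:
        snap = snapshot if snapshot is not None else self.current()
        return [row for data_file in snap.data_files for row in data_file]


def materialize(topic: List[str], table: ToyTable, batch_size: int = 2) -> None:
    """Hypothetical sink: copy topic records not yet in the table, one commit per batch."""
    start = table.current().last_offset + 1  # resume after the last committed offset
    for i in range(start, len(topic), batch_size):
        batch = topic[i:i + batch_size]
        table.commit(batch, last_offset=i + len(batch) - 1)


topic = ["a", "b", "c", "d", "e"]  # a Kafka topic partition, modeled as a list
table = ToyTable()
materialize(topic, table)
print(len(table.snapshots), table.scan())  # 3 ['a', 'b', 'c', 'd', 'e']
print(table.scan(table.snapshots[0]))      # ['a', 'b'] (time travel to the first snapshot)
materialize(topic, table)                  # rerun: nothing new, so no new snapshot
print(len(table.snapshots))                # 3
```

Storing the offset inside the snapshot is what ties the streaming side to the table side: the commit that makes rows visible is the same commit that records how far the topic has been consumed.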

As Tableflow reaches maturity, Confluent positions itself to challenge industry giants like Snowflake and Databricks directly. This bold step not only reaffirms Iceberg's status as the preferred data lake format but also signals Confluent's ambition to redefine the data landscape.

Slide deck from Current 2023: Flink brings mixed execution mode and has the potential to be the streaming warehouse.

Slide deck from Kafka Summit London 2024: Confluent now fully embraces Apache Iceberg.

The Rise of GenAI

GenAI has emerged as the quintessential buzzword of 2024, capturing the attention of the tech world. Engaging in discussions about GenAI has become almost a prerequisite to staying relevant in the rapidly advancing tech landscape.

At Current 2023, Confluent unveiled its AI ambitions, albeit in broad strokes. The discourse primarily revolved around the potential of data streaming platforms to facilitate real-time data ingestion and processing, leaving much to the imagination regarding specifics.

By the time of the Kafka Summit London 2024, Confluent's narrative around GenAI had gained clarity and depth. The conversation evolved beyond the basic premise of transferring data between operational databases and vector databases. Confluent articulated a more sophisticated vision, incorporating the use of Flink for invoking external vector embedding services, thus marking a significant step forward in their AI strategy.
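The 2024 picture is a streaming job that enriches each record by calling an embedding service and then writes the vector to a vector database, so retrieval always sees fresh data. The Python sketch below models that per-record flow under explicit assumptions: `embed` is a hypothetical placeholder (a token-hashing trick, not a semantic model), `ToyVectorStore` stands in for a vector database, and nothing here is Flink's or any vendor's API.

```python
import hashlib
import math
from typing import Dict, Iterable, List, Tuple


def embed(text: str, dims: int = 8) -> List[float]:
    """Hypothetical stand-in for an external embedding service.

    A real pipeline would call a model endpoint here; this just hashes tokens
    into a fixed-size, unit-length vector so the example runs offline.
    """
    vec = [0.0] * dims
    for token in text.lower().split():
        bucket = int(hashlib.sha256(token.encode()).hexdigest(), 16) % dims
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class ToyVectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.vectors: Dict[str, List[float]] = {}

    def upsert(self, key: str, vector: List[float]) -> None:
        self.vectors[key] = vector  # the latest event for a key replaces the old vector

    def nearest(self, query: List[float], k: int = 1) -> List[str]:
        # Dot product equals cosine similarity because all vectors are unit length.
        def score(key: str) -> float:
            return sum(a * b for a, b in zip(self.vectors[key], query))
        return sorted(self.vectors, key=score, reverse=True)[:k]


def process_stream(events: Iterable[Tuple[str, str]], store: ToyVectorStore) -> None:
    """The per-record step a streaming job would run: call the embedder, then sink."""
    for key, text in events:
        store.upsert(key, embed(text))


store = ToyVectorStore()
process_stream([("doc-1", "refund my order"), ("doc-2", "shipping delayed package")], store)
print(store.nearest(embed("refund my order")))  # ['doc-1']
```

Swapping `embed` for a call to a real embedding endpoint, and `ToyVectorStore` for a real vector database client, is where an actual deployment would differ; the shape of the per-record enrich-then-sink step stays the same.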

Despite this progress, the roadmap for implementing Confluent's GenAI initiatives remains somewhat nebulous, lacking detailed applications and real-world use cases. Furthermore, the challenge of handling unstructured data, such as text, images, video, and speech, for model training persists. The extent to which Confluent's solutions can effectively bridge the gap between structured and unstructured data realms is yet to be fully understood, leaving a space ripe for innovation and exploration.

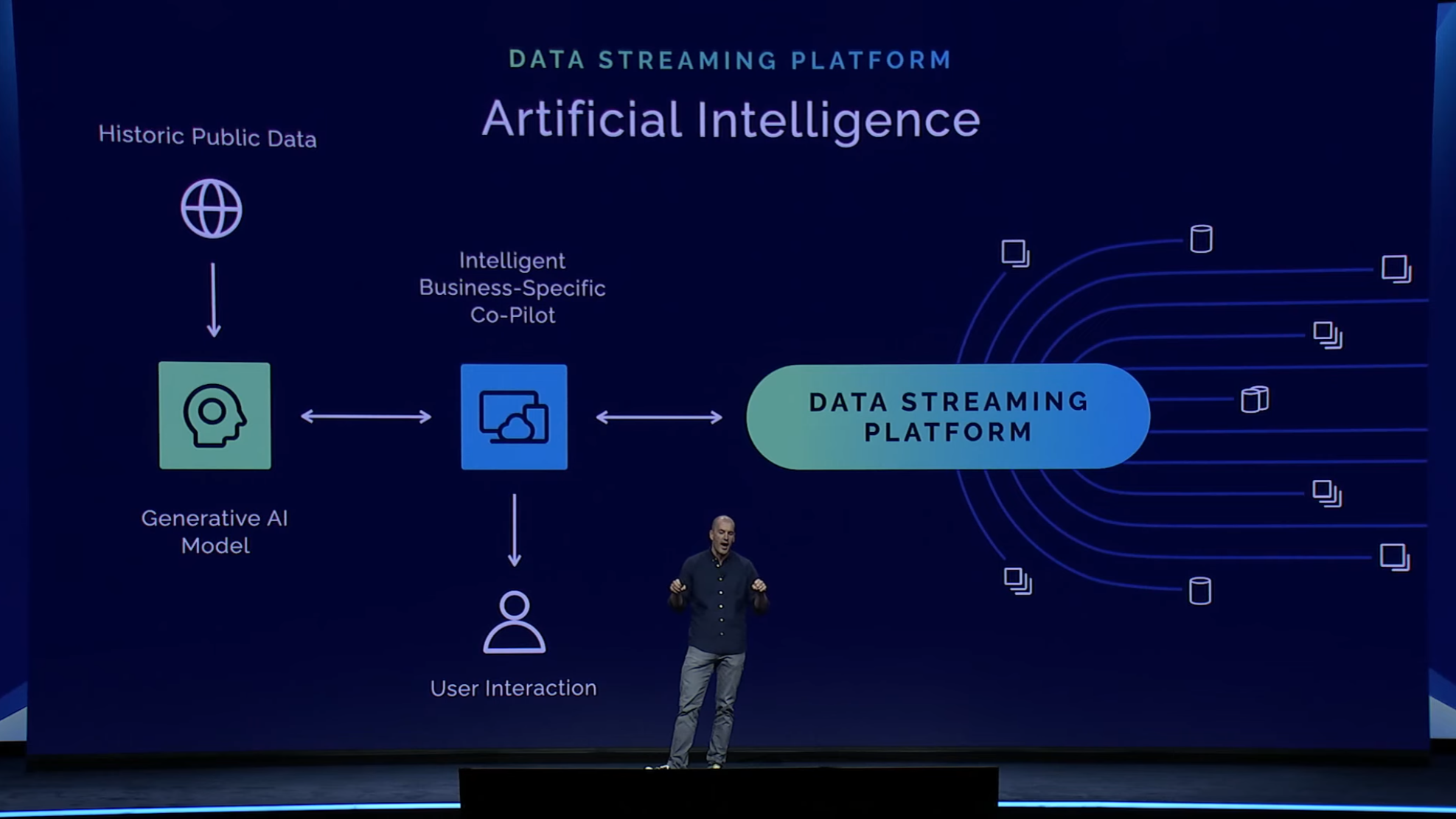

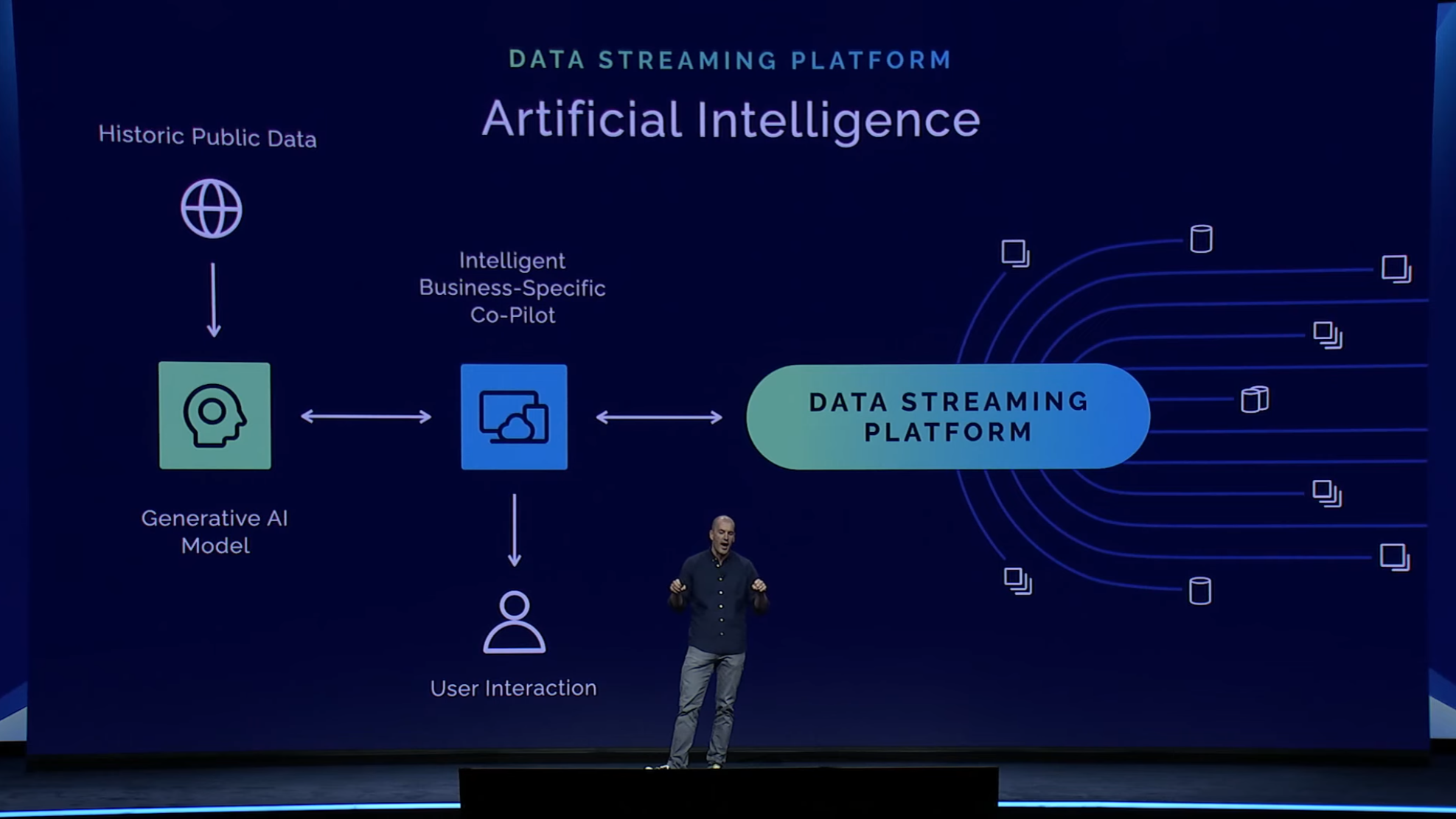

Slide deck from Current 2023: the debut of Confluent’s AI dream.

Slide deck from Kafka Summit London 2024: The AI dream is now fleshed out with Flink and a vector embedding service.

Conclusion

To encapsulate Confluent’s strategic focus, four key terms come to mind: high-level abstraction, cost efficiency, unified systems, and GenAI. These pillars underscore a broader shift in technology preferences and needs—towards tools that are simultaneously simpler and more potent, cost-effective, and enhanced by AI to streamline daily tasks.

Far from being a niche, streaming technology represents both the current landscape and the future trajectory of data management and analysis.