Real-time ETL

Enable immediate data processing, support event-driven architectures, and enhance operational efficiency and responsiveness.

Introduction

Real-time ETL (extract, transform, and load) refers to the practice of continuously extracting, transforming, and moving data among different systems as new data is generated.

Real-time ETL pipelines are a key part of modern, event-driven apps. They enable immediate data processing, responsive decision-making, and optimized operations. These capabilities are crucial for success in today's fast-paced, data-driven business world.

Technical challenges

Data volume and velocity: Real-time ETL pipelines must handle high volumes of data arriving at rapid speeds, requiring robust infrastructure and efficient processing capabilities.

Low latency requirements: Real-time ETL demands extremely low latency between data ingestion and availability for analysis, often requiring sub-second processing times.

Complex transformations: Performing complex data transformations in real time can be computationally intensive and difficult to optimize.

Technology integration: Real-time processing relies on several technologies and tools, such as stream processing engines, message queues, and real-time databases, and integrating them adds complexity.

Fault tolerance and reliability: Maintaining system reliability and implementing effective error handling is crucial, as any downtime or data loss can have immediate impacts.

Scalability: Real-time ETL systems need to scale efficiently to handle increasing data volumes and velocities without compromising performance.

Handling schema changes: Pipelines must adapt to changes in data structure or schema in real time without being disrupted.

Why RisingWave?

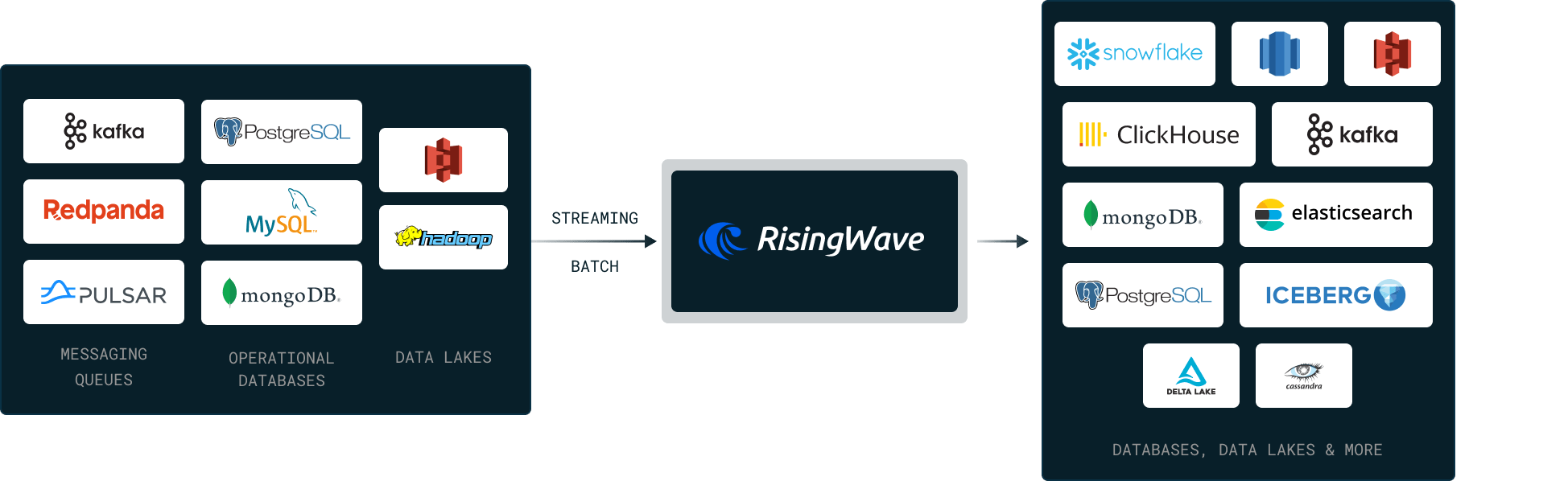

Extract: RisingWave efficiently ingests large volumes of high-velocity data in different formats from popular databases, messaging systems, and data platforms.

Transform: RisingWave provides flexible and expressive streaming SQL and user-defined functions (UDFs), so users can define intricate data transformation rules to suit their specific requirements.

Load: RisingWave can export processed data to downstream systems like databases, warehouses, or queues. This data is ready for analytical queries or operational workflows.

Scaling is straightforward with RisingWave. You can add new nodes without downtime.

By integrating RisingWave with a semantic layer like Cube, users can expose results as APIs and connect them to their event-driven systems.

Recovery from failures is rapid. Because compute and storage are decoupled in RisingWave, recovery only requires refetching data from storage, so data processing jobs can be restored quickly after a failure.

Sample code

RisingWave allows users to continuously extract, transform, and load data from data sources to destinations.

Step 1

Create a source in RisingWave to receive event streams from your Kafka topic.

    -- Ingest event streams from Kafka
    CREATE SOURCE IF NOT EXISTS cart_event (
        cust_id VARCHAR,
        event_time TIMESTAMP,
        item_id VARCHAR
    )
    WITH (
        connector='kafka',
        topic='cart-events', ...
    ) FORMAT PLAIN ENCODE JSON;

Step 2

Create a table in RisingWave to capture the latest product catalog from PostgreSQL.

    -- Ingest CDC from PostgreSQL
    CREATE TABLE product_catalog (
        item_id varchar,
        name varchar,
        price double precision,
        PRIMARY KEY (item_id)
    )
    WITH (
        connector = 'postgres-cdc',
        hostname = '127.0.0.1', ...
    );

Step 3

Configure a sink in RisingWave to perform enrichment on the data and directly transfer the enriched stream to another Kafka topic.

    -- Perform enrichment and directly sink to Kafka
    CREATE SINK data_enrichment AS SELECT
        c.cust_id,
        c.event_time,
        p.name,
        p.price
    FROM
        cart_event c
    JOIN
        product_catalog p
    ON
        c.item_id = p.item_id
    WITH (
        connector='kafka',
        topic='enriched-cart-events', ...
    )
    FORMAT PLAIN ENCODE JSON;
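
The transform step is not limited to joins. As a minimal sketch, assuming the cart_event source from Step 1 already exists, a materialized view can keep a per-customer event count for each one-minute tumbling window continuously up to date. The view name cart_events_per_minute, the event_count column, and the one-minute window size are illustrative assumptions, not part of the original example.

```sql
-- Hypothetical example: count cart events per customer in 1-minute tumbling windows.
-- Assumes the cart_event source from Step 1; the view and column names are illustrative.
CREATE MATERIALIZED VIEW cart_events_per_minute AS
SELECT
    cust_id,
    window_start,
    window_end,
    COUNT(*) AS event_count
FROM TUMBLE(cart_event, event_time, INTERVAL '1 MINUTE')
GROUP BY
    cust_id,
    window_start,
    window_end;

-- Query the continuously maintained result like a regular table.
SELECT *
FROM cart_events_per_minute
ORDER BY window_start DESC
LIMIT 10;
```

Because the view is maintained incrementally as new events arrive, the ad hoc query reads precomputed results rather than rescanning the stream.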
